RocksDB-Learned Range Filter

- LeRF: A Learned Range Filter for Key-Value Stores

- AegisKV: A Range-query Optimized Key-Value Store via Learned Range Filter and Efficient Partitioning

- AegisKV: Optimizing Range Queries for Key-Value Stores

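- Submission targets

Submit the filter as a standalone paper: NeurIPS if it leans toward machine learning, a systems conference if it leans toward KV stores.

- Range-optimized KV store: cites the filter paper; delete optimization; asynchronous scan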

Abstract

Introduction

- Range query performance problems

- Problems caused by large-scale deletes

Background and Motivation

- LSM-tree and RocksDB

- Range Query

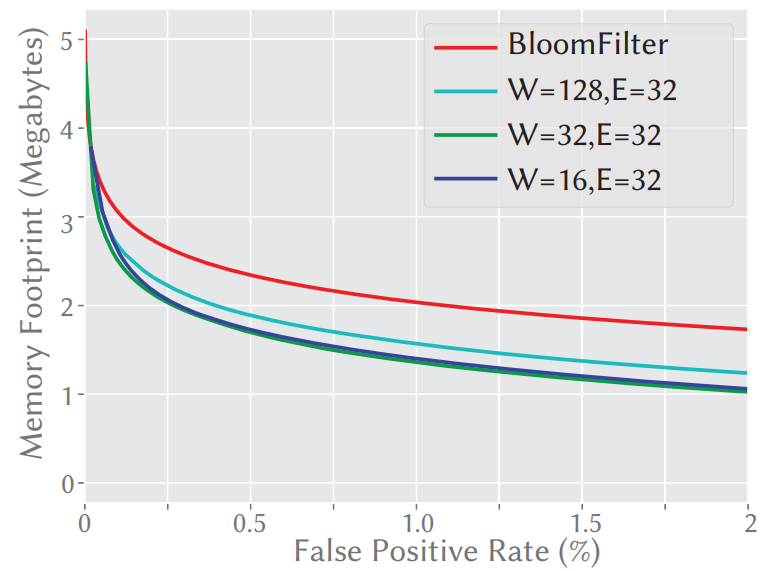

- Range Filter

Rosetta, SuRF,

- System Stalls

Design

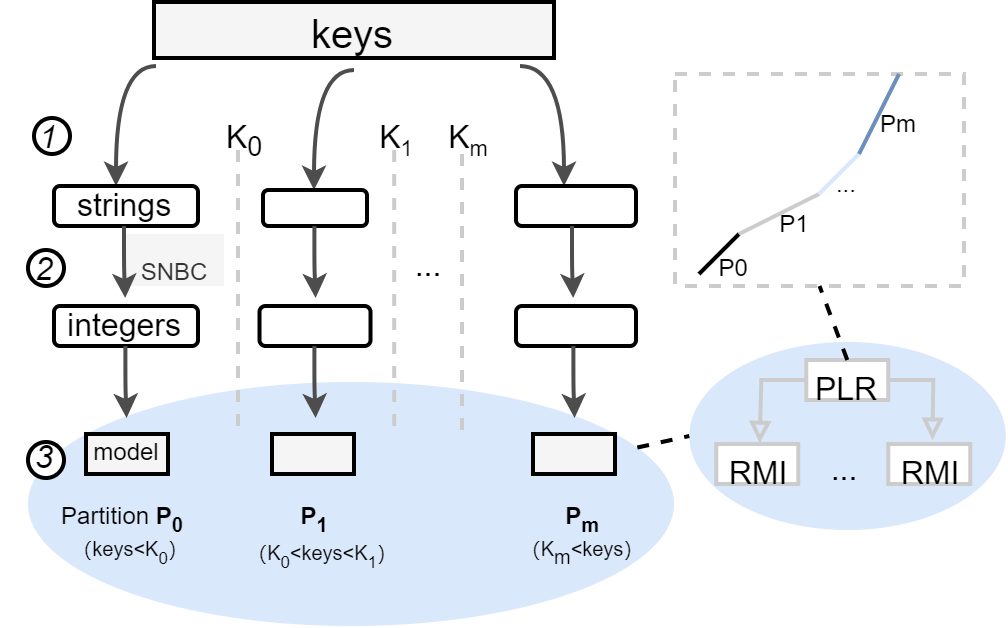

- Overall System Architecture

Learned Range Filter

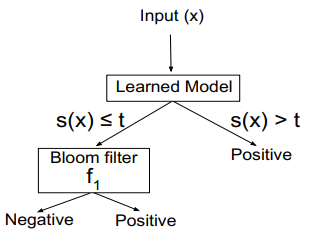

- Treat the filter as a classification problem

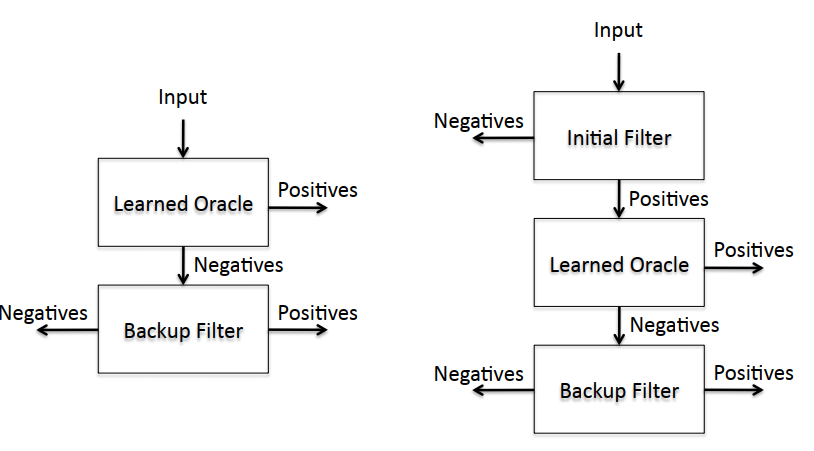

- Guaranteeing no false negatives: a backup filter

- Classification algorithm

- Reducing the error rate

Partition Scheduler

Implementation && Evaluation

Related Work

Conclusion

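Related papers

- Filter characteristics

- Existence index: a 0/1 classification problem

- Allows false positives but never gives a false negative (a "present" answer may be wrong; an "absent" answer is always right)

- Tradeoff: space vs. accuracy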

Bloom filter

Consider a set \(S = \{x_1, x_2, \ldots, x_n\}\) of n keys. A Bloom filter consists of an array of m bits and uses k independent hash functions \(\{h_1, h_2, \ldots, h_k\}\), with the range of each \(h_i\) being the integers between 0 and m - 1.

FPR (false positive rate): \(FPR = (1-(1-1/m)^{kn})^k \approx (1-e^{-kn/m})^k\)
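The definition and the FPR formula can be checked with a minimal sketch. The class name `BloomFilter`, the helper `expected_fpr`, the use of SHA-256 with double hashing to simulate the k hash functions, and the parameters n = 1000, m = 10000, k = 7 are all illustrative assumptions, not part of the original notes.

```python
import hashlib


class BloomFilter:
    """Bloom filter over an m-bit array with k hash functions."""

    def __init__(self, m: int, k: int):
        self.m = m
        self.k = k
        self.bits = bytearray((m + 7) // 8)

    def _positions(self, key: str):
        # Assumption: simulate k hash functions with double hashing over one
        # SHA-256 digest; each position falls in [0, m - 1].
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key: str) -> bool:
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(key))


def expected_fpr(m: int, k: int, n: int) -> float:
    """FPR = (1 - (1 - 1/m)^(kn))^k, the formula from the notes."""
    return (1.0 - (1.0 - 1.0 / m) ** (k * n)) ** k


n, m, k = 1000, 10_000, 7
bf = BloomFilter(m, k)
keys = [f"key-{i}" for i in range(n)]
for key in keys:
    bf.add(key)

# Inserted keys are always reported as present (no false negatives).
no_false_negatives = all(bf.might_contain(key) for key in keys)

# Non-keys may be reported as present; measure how often that happens.
non_keys = [f"absent-{i}" for i in range(20_000)]
measured_fpr = sum(bf.might_contain(q) for q in non_keys) / len(non_keys)

print(no_false_negatives, expected_fpr(m, k, n), measured_fpr)
```

The measured rate should land near the analytical value; the gap between them shrinks as the number of probed non-keys grows.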

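Problems with Bloom filters

- Data-oblivious, with high space overhead and a high false positive rate: Learned Filter

- No range query support: SuRF, Rosetta

- Low performance, high overhead: Chucky

- Older SSDs/HDDs had high access latency, so the overhead introduced by Bloom filters was a small share of the total and could be ignored

- As NVMe SSDs get faster and the performance gap to DRAM narrows, maintaining a Bloom filter at every level adds significant query latency, making Bloom filters one of the new bottlenecks in LSM-trees

Related papers: Prediction and filtering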

- Range Filter for KV Stores

- Learned Filter

Paper 1-1: SuRF: Practical Range Query Filtering with Fast Succinct Tries

SIGMOD'18 Best Paper, Andrew Pavlo's group at CMU

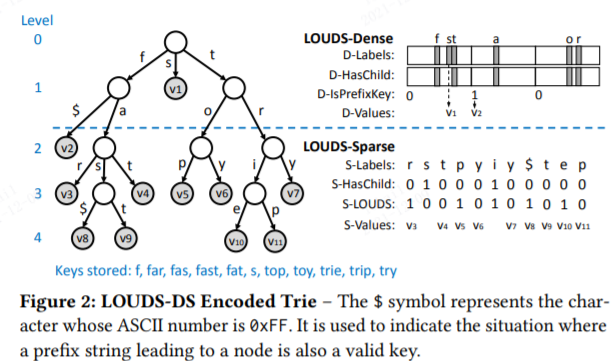

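Proposes a new data structure, SuRF (Succinct Range Filter), built on FST (Fast Succinct Tries), that supports both point queries and range queries. FST encodes the trie with LOUDS-DS: the upper levels of the trie have few nodes and hold frequently accessed hot data, while the lower levels contain most of the nodes and hold cold data. LOUDS-DS lays nodes out in order within each level, and this ordering is what lets it support range queries efficiently.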

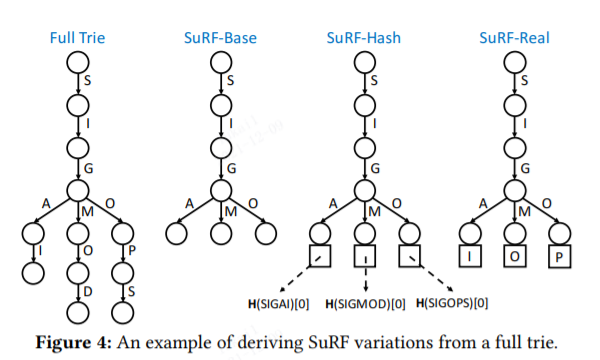

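A full trie can look up every key exactly, but it consumes a large amount of memory. To balance the filter's false positive rate against its memory footprint, SuRF uses a pruned trie and proposes several variants:

- SuRF-Base stores only the shortest key prefix that uniquely identifies each key, but this yields a high false positive rate when keys share long prefixes. For example, experiments show a false positive rate of nearly 25% when email addresses are used as keys

- To lower SuRF-Base's false positive rate, SuRF-Hash adds a few hash bits per key; a query hashes the lookup key and compares the result against the stored bits to check whether the target key may exist. Experiments show that SuRF-Hash needs only 2 to 4 hash bits to bring the false positive rate down to 1%. Although SuRF-Hash effectively lowers the false positive rate, it does not improve range query performance

- Unlike SuRF-Hash, SuRF-Real stores n real key bits after each key prefix to make keys easier to tell apart. This lowers the false positive rate while improving both point and range query performance, but because some keys differ little beyond their prefixes, SuRF-Real's false positive rate is not as low as SuRF-Hash's

- SuRF-Mixed combines the advantages of SuRF-Hash and SuRF-Real and supports both point queries and range queries effectively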
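The pruning idea behind SuRF-Base can be illustrated with a small sketch. It keeps the shortest distinguishing prefix of each key in a sorted Python list instead of a LOUDS-DS encoded trie, so it only demonstrates the filtering semantics, not the succinct encoding. The function names, the `TERMINATOR` convention (assumed never to occur inside a key), and the sample email keys are hypothetical.

```python
from bisect import bisect_left, bisect_right

TERMINATOR = "\x00"  # assumption: this character never occurs inside a key


def common_prefix_len(a: str, b: str) -> int:
    n = 0
    for ca, cb in zip(a, b):
        if ca != cb:
            break
        n += 1
    return n


def build_pruned_prefixes(keys):
    """Keep, per key, the shortest prefix that separates it from its sorted neighbours."""
    terminated = sorted({k + TERMINATOR for k in keys})
    prefixes = []
    for i, key in enumerate(terminated):
        shared = 0
        if i > 0:
            shared = max(shared, common_prefix_len(key, terminated[i - 1]))
        if i + 1 < len(terminated):
            shared = max(shared, common_prefix_len(key, terminated[i + 1]))
        prefixes.append(key[: shared + 1])
    return prefixes  # sorted, and no stored prefix is a prefix of another


def may_contain(prefixes, key):
    """Point query: True means 'maybe present', False means 'definitely absent'."""
    q = key + TERMINATOR
    i = bisect_right(prefixes, q) - 1
    return i >= 0 and q.startswith(prefixes[i])


def may_contain_range(prefixes, lo, hi):
    """Closed range query [lo, hi] with the same one-sided error."""
    lo_t, hi_t = lo + TERMINATOR, hi + TERMINATOR
    i = bisect_left(prefixes, lo_t)
    if i < len(prefixes) and prefixes[i] <= hi_t:
        return True  # a stored prefix starts inside the range
    # Otherwise only a stored prefix of lo itself can cover keys >= lo.
    return i > 0 and lo_t.startswith(prefixes[i - 1])


emails = ["com.apple@tim", "com.google@ann", "com.google@bob", "org.acm@sig"]
prefixes = build_pruned_prefixes(emails)
print(prefixes)
print(may_contain(prefixes, "com.google@ann"))         # stored key
print(may_contain(prefixes, "com.amazon@jeff"))        # shares the pruned prefix "com.a"
print(may_contain_range(prefixes, "com.h", "com.z"))   # no stored prefix overlaps
```

The lookup for `com.amazon@jeff` is a false positive caused by pruning, which is the effect the hash or real-key suffix bits in the other variants are meant to reduce.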

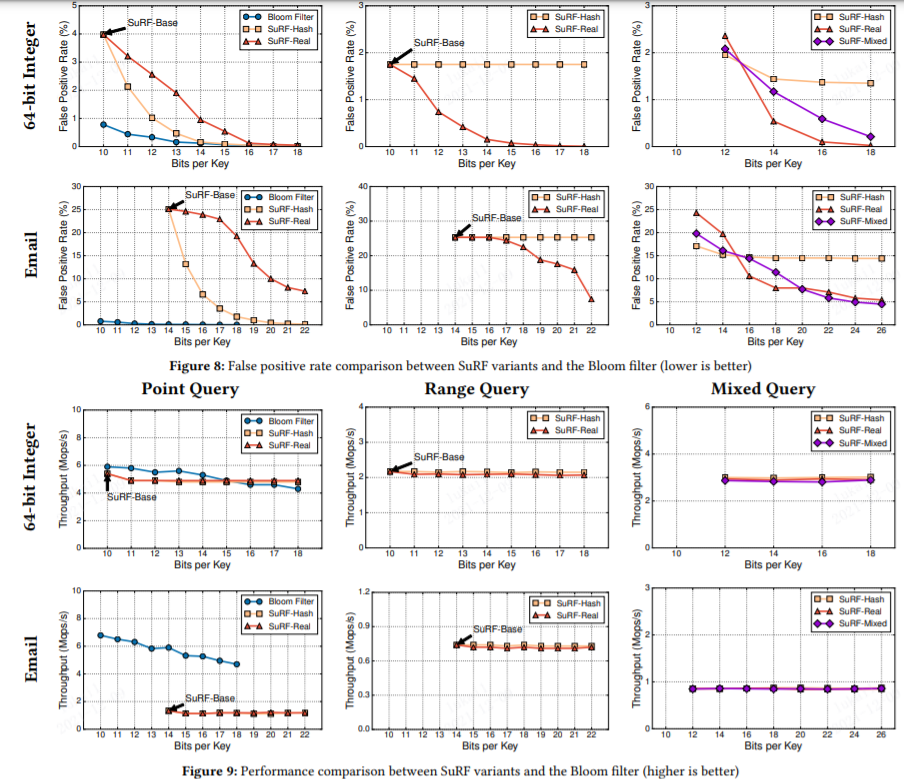

Evaluation

Code: https://github.com/efficient/SuRF

Metrics: false positive rate (FPR), performance, and space

Workloads: YCSB, email address data

- The datasets are 100M 64-bit random integer keys and 25M email keys

- We test two representative key types: 64-bit random integers generated by YCSB and email addresses (host reversed, e.g., “com.domain@foo”) drawn from a real-world dataset (average length = 22 bytes, max length = 129 bytes).

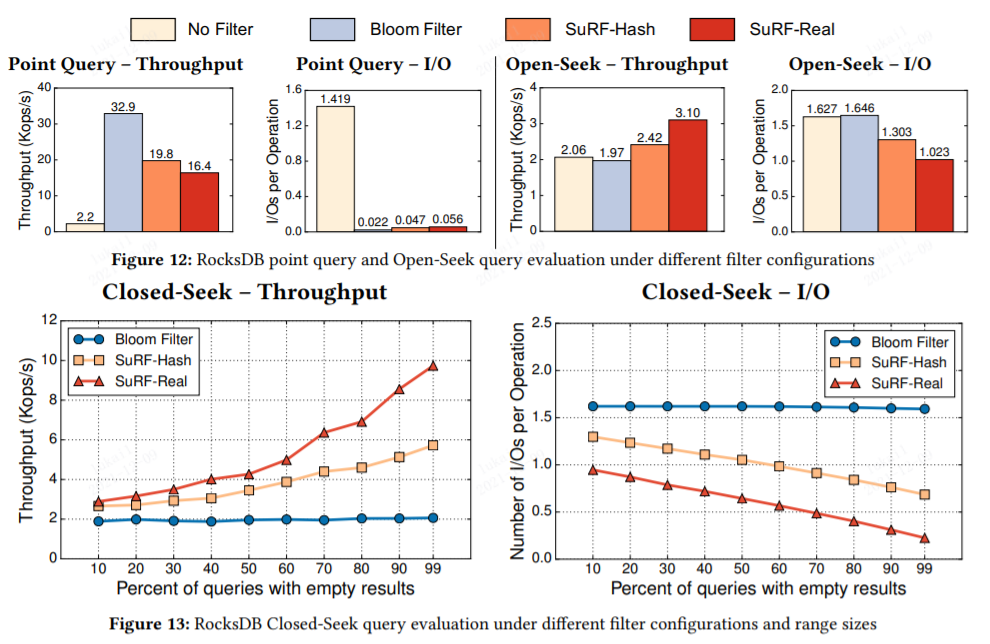

Application: RocksDB evaluation with YCSB workloads

- Procedure: We first warm the cache with 1M uniformly-distributed point queries to existing keys so that every SSTable is touched ∼ 1000 times and the block indexes and filters are cached. After the warm-up, both RocksDB’s block cache and the OS page cache are full. We then execute 50K application queries, recording the end-to-end throughput and I/O counts

Ditch Excel!

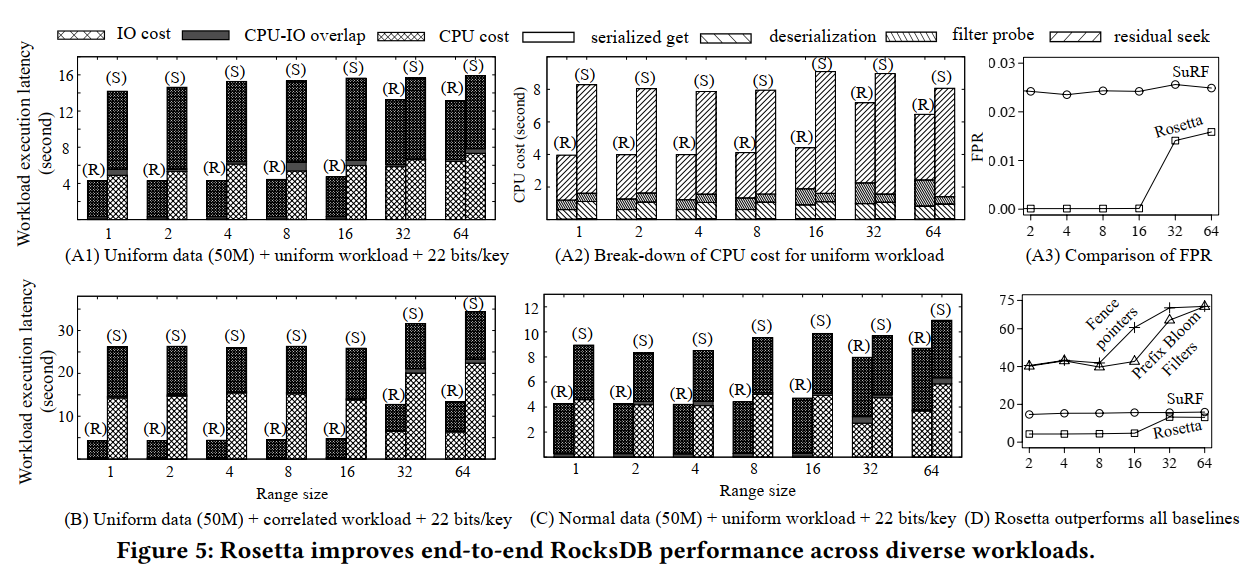

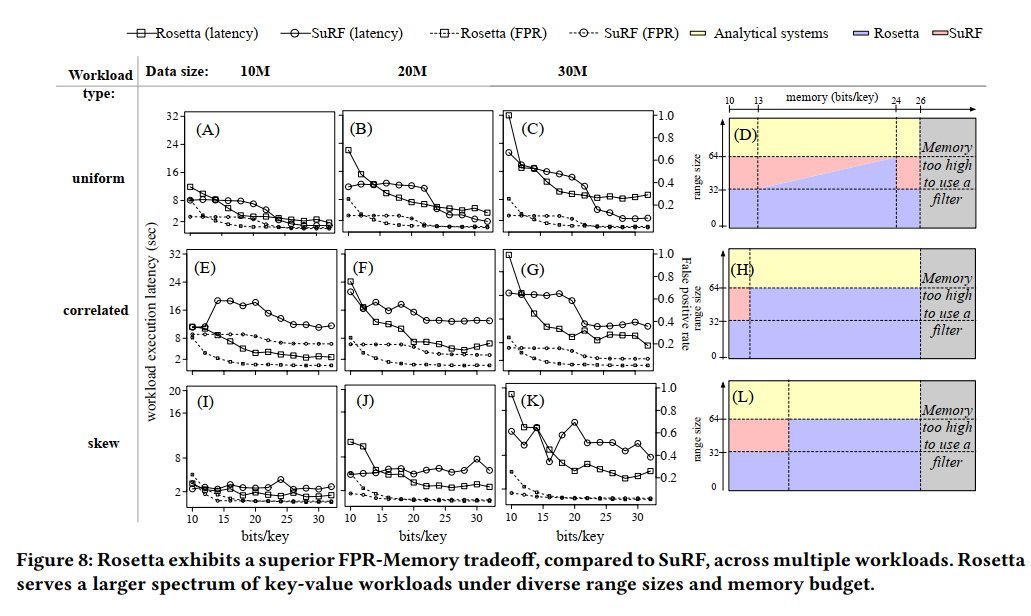

Paper 1-2: Rosetta: A Robust Space-Time Optimized Range Filter for Key-Value Stores

- SIGMOD'20, Stratos Idreos's group at Harvard (also Monkey, Dostoevsky, ...)

- Rosetta: improves on SuRF's range query capability

- Problem 1: Short and Medium Range Queries are Significantly Sub-Optimal

- Problem 2: Lack of Support for Workloads With Key Query Correlation or Skew

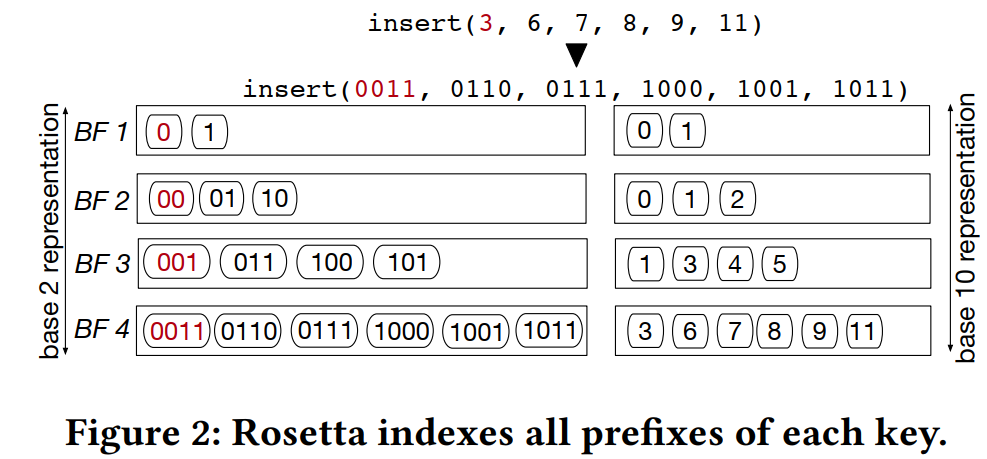

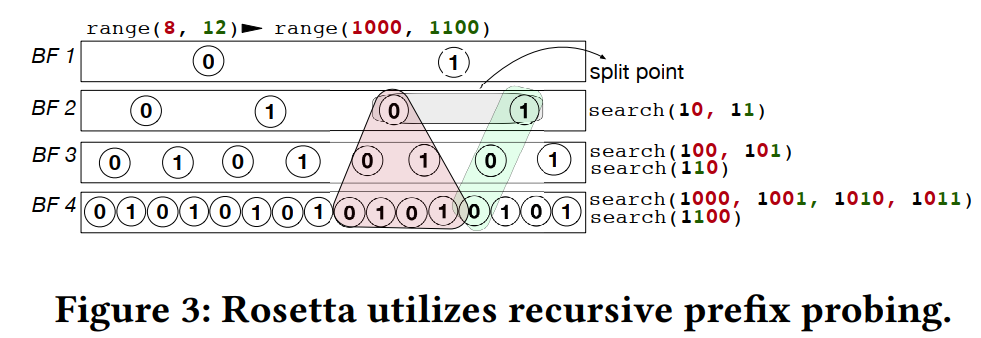

Design: index every binary prefix of each key with a hierarchy of Bloom filters, then translate each range query into multiple Bloom filter probes
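A minimal sketch of this design, assuming 16-bit integer keys and one equally sized Bloom filter per prefix length (a real deployment would tune the memory given to each level). A range is decomposed into dyadic intervals, each interval's prefix is probed at its level, and a positive probe is then re-checked down to the leaf level. The class names, `KEY_BITS`, and the filter sizes are illustrative assumptions.

```python
import hashlib

KEY_BITS = 16  # assumption: small integer keys keep the example readable


class Bloom:
    def __init__(self, m, k):
        self.m, self.k = m, k
        self.bits = bytearray(m)  # one byte per bit, for brevity

    def _positions(self, item):
        d = hashlib.sha256(str(item).encode()).digest()
        h1 = int.from_bytes(d[:8], "big")
        h2 = int.from_bytes(d[8:16], "big") | 1
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, item):
        for p in self._positions(item):
            self.bits[p] = 1

    def might_contain(self, item):
        return all(self.bits[p] for p in self._positions(item))


class PrefixBloomRangeFilter:
    def __init__(self, keys, bits_per_level=4096, hashes=3):
        # levels[l] indexes the l-bit prefixes of all keys (levels[0] is unused).
        self.levels = [Bloom(bits_per_level, hashes) for _ in range(KEY_BITS + 1)]
        for key in keys:
            for length in range(1, KEY_BITS + 1):
                self.levels[length].add(key >> (KEY_BITS - length))

    def _doubt(self, prefix, length):
        # A positive probe may be a false positive: confirm it level by level.
        if not self.levels[length].might_contain(prefix):
            return False
        if length == KEY_BITS:
            return True
        return (self._doubt(prefix << 1, length + 1)
                or self._doubt((prefix << 1) | 1, length + 1))

    def _search(self, prefix, length, lo, hi):
        shift = KEY_BITS - length
        node_lo = prefix << shift
        node_hi = node_lo | ((1 << shift) - 1)
        if node_hi < lo or hi < node_lo:
            return False  # dyadic interval lies outside the query
        if length > 0 and lo <= node_lo and node_hi <= hi:
            return self._doubt(prefix, length)  # interval fully inside the query
        return (self._search(prefix << 1, length + 1, lo, hi)
                or self._search((prefix << 1) | 1, length + 1, lo, hi))

    def may_contain_range(self, lo, hi):
        """Closed range [lo, hi]; False means no key can be in the range."""
        return self._search(0, 0, lo, hi)


rf = PrefixBloomRangeFilter([100, 2000, 2001, 40000])
print(rf.may_contain_range(90, 110), rf.may_contain_range(5000, 6000))
```

The number of probes grows with the range length, which is consistent with the later note that this style of filter is a poor fit for long range queries.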

Evaluation

- Workload 1: YCSB key-value workloads that are variations of Workload E

- Metrics: latency, CPU cost, memory footprint, FPR

- Workload 2: several real-world workloads; the variable-length string dataset Wikipedia Extraction

- We use a variable-length string data set, Wikipedia Extraction (WEX), comprising a processed dump (of size 6M) of English-language Wikipedia

- We generate 50 million (\(50 \times 10^6\)) keys each of size 64 bits (and 512 byte values) using both uniform, and normal distributions.

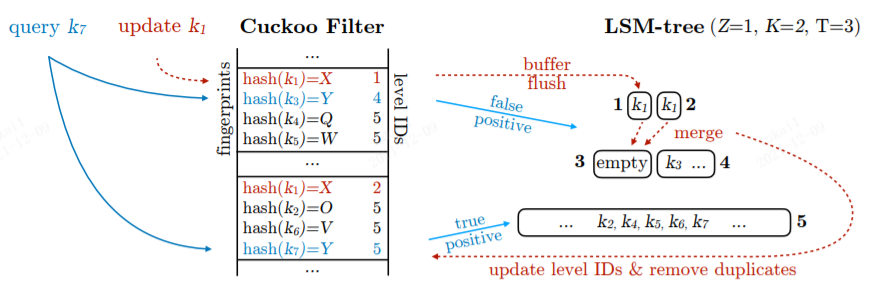

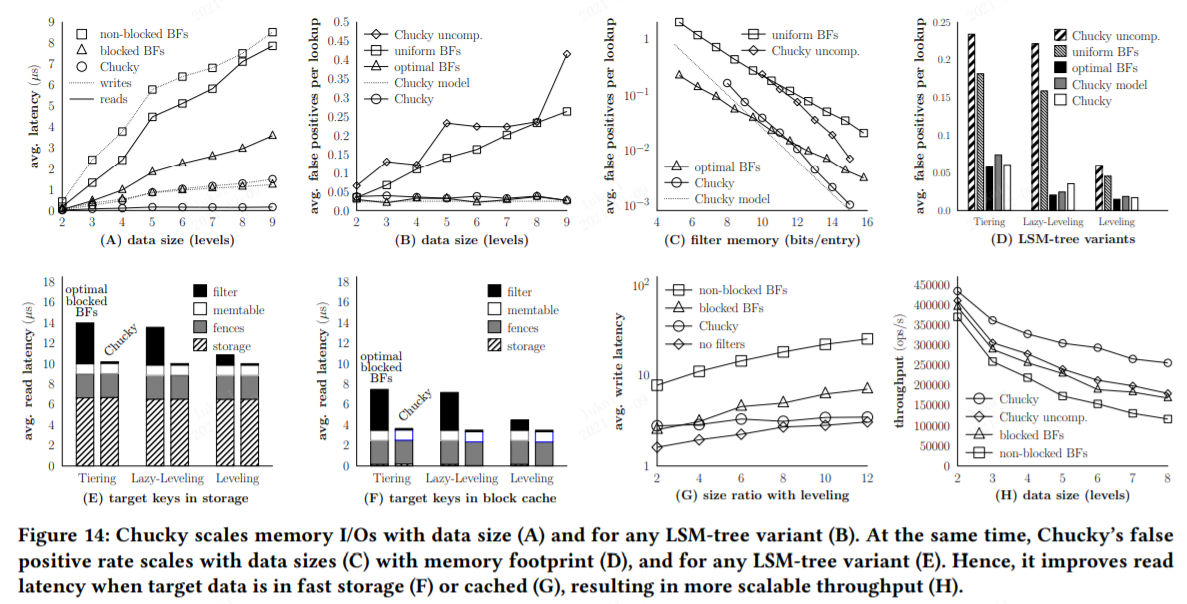

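Paper 1-3: Chucky: A Succinct Cuckoo Filter for LSM-Tree

- SIGMOD'21, Stratos Idreos's group at Harvard

- Focus: on high-performance storage media, the overhead introduced by Bloom filters can no longer be ignored

- Design: Succinct + Cuckoo Filter

- Chucky proposes replacing the multiple Bloom filters in an LSM-tree with a single succinct Cuckoo filter, which effectively reduces lookup overhead

- Evaluation: YCSB workload

- Metrics: Memory I/O Scalability, FPR Scalability, Data in Storage vs. Memory, End-to-End Write Cost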

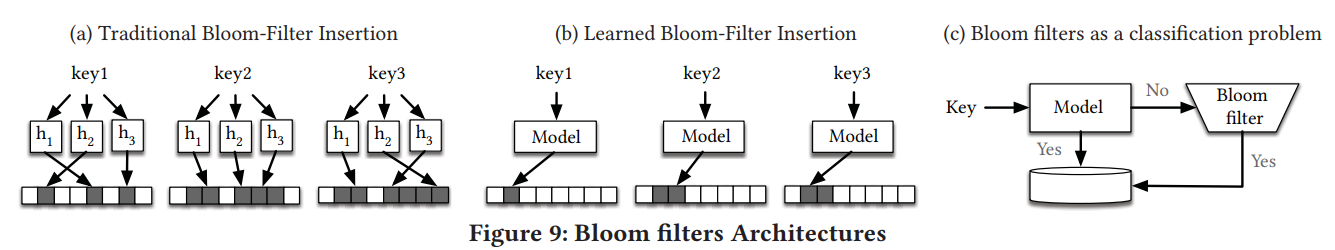

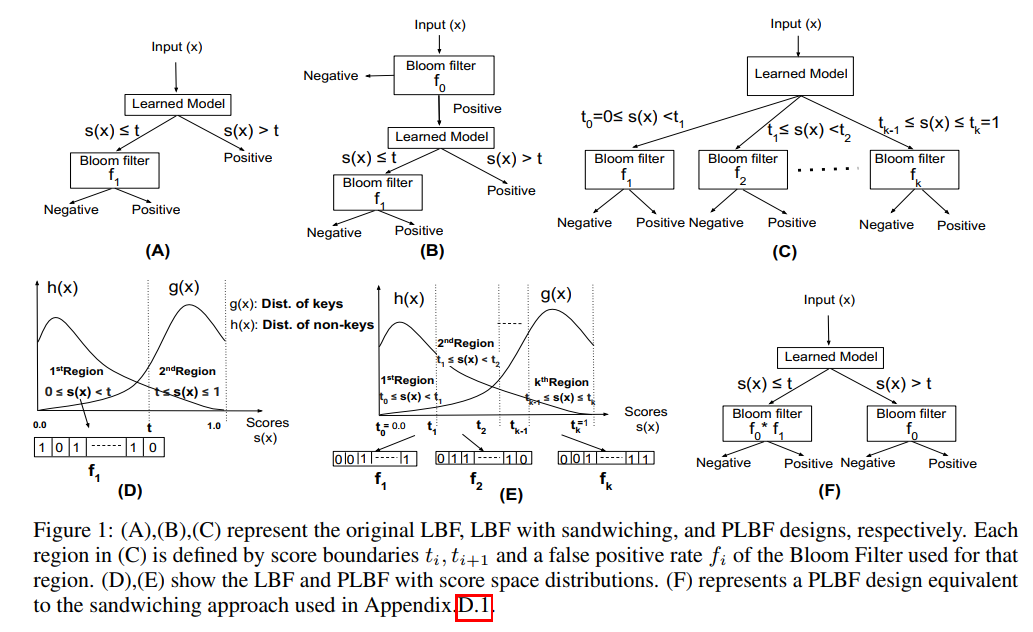

Paper 2-1: A Model for Learned Bloom Filters, and Optimizing by Sandwiching

NeurIPS 2018, single author: Michael Mitzenmacher (Harvard)

The original Learned Bloom Filter

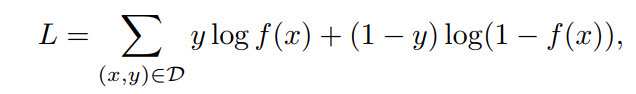

We then train a model on the labeled set of keys and non-keys; that is, they suggest using a neural network on this binary classification task to produce a probability, based on minimizing the log loss function

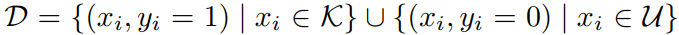

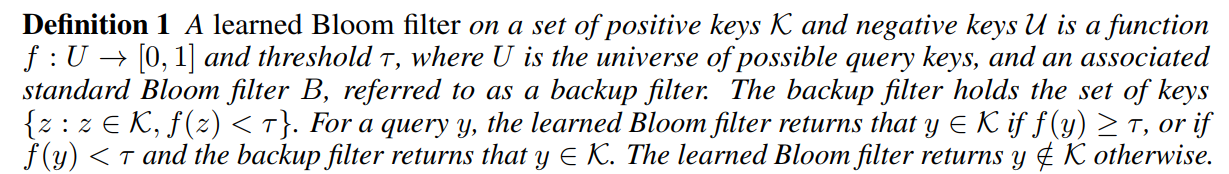
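The construction can be sketched end to end under simplifying assumptions: a tiny logistic regression on one-dimensional integer keys stands in for the neural network, it is trained by minimizing the log loss, and every key the model scores below the threshold is stored in a backup Bloom filter so that no key is ever rejected. The class names, the feature map, the threshold `tau`, and the synthetic key set are all hypothetical.

```python
import hashlib
import math
import random


class Bloom:
    """Small Bloom filter used as the backup filter."""

    def __init__(self, m, k):
        self.m, self.k = m, k
        self.bits = bytearray(m)  # one byte per bit, for brevity

    def _positions(self, item):
        d = hashlib.sha256(str(item).encode()).digest()
        h1 = int.from_bytes(d[:8], "big")
        h2 = int.from_bytes(d[8:16], "big") | 1
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, item):
        for p in self._positions(item):
            self.bits[p] = 1

    def might_contain(self, item):
        return all(self.bits[p] for p in self._positions(item))


def sigmoid(t):
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def features(x, scale):
    z = x / scale
    return (1.0, z, z * z)


def train(samples, scale, epochs=100, lr=1.0):
    """Fit f(x) = sigmoid(w . features(x)) by gradient descent on the log loss."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for x, y in samples:
            phi = features(x, scale)
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, phi))) - y
            for j in range(3):
                grad[j] += err * phi[j]
        w = [wi - lr * g / len(samples) for wi, g in zip(w, grad)]
    return w


class LearnedBloomFilter:
    def __init__(self, keys, non_keys, tau=0.5, backup_bits=16384, backup_hashes=3):
        self.scale = float(max(max(keys), max(non_keys)))
        samples = [(x, 1) for x in keys] + [(x, 0) for x in non_keys]
        self.w = train(samples, self.scale)
        self.tau = tau
        # Keys the model would reject are the model's false negatives;
        # storing them in the backup filter removes every false negative.
        self.false_negatives = [x for x in keys if self.score(x) < tau]
        self.backup = Bloom(backup_bits, backup_hashes)
        for x in self.false_negatives:
            self.backup.add(x)

    def score(self, x):
        phi = features(x, self.scale)
        return sigmoid(sum(wi * fi for wi, fi in zip(self.w, phi)))

    def might_contain(self, x):
        return self.score(x) >= self.tau or self.backup.might_contain(x)


rng = random.Random(0)
keys = list(range(4000, 6000, 2))
key_set = set(keys)
non_keys = [x for x in (rng.randrange(10_000) for _ in range(1000)) if x not in key_set]
lbf = LearnedBloomFilter(keys, non_keys)
no_false_negatives = all(lbf.might_contain(x) for x in keys)
print(no_false_negatives, len(lbf.false_negatives))
```

The no-false-negative guarantee holds however poorly the model fits; model quality only decides how many keys spill into the backup filter and therefore how much space it needs.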

定义

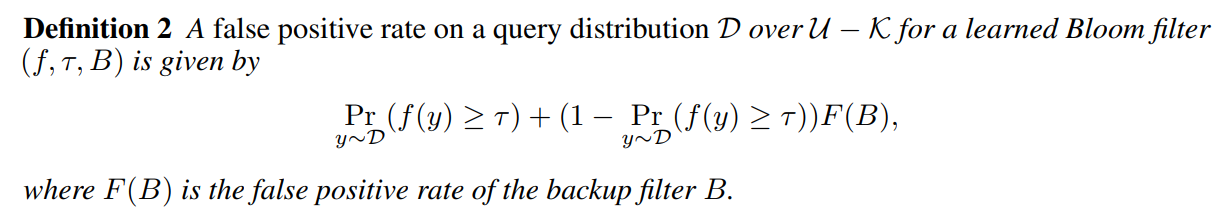

FPR的评估

- 与标准的布隆过滤器不同,其高度依赖于查询集,并且没有独立于查询进行很好的定义

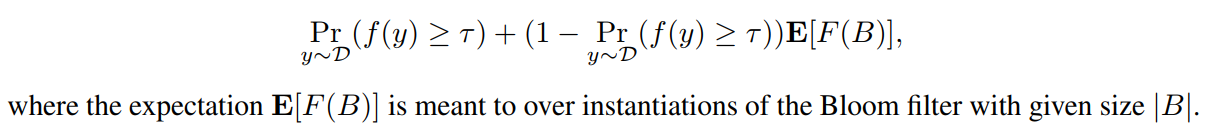

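While F(B) is itself a random variable, the FPR is well concentrated around its expectation, which depends only on the size of the filter B and on the number of false negatives from K that must be stored in the filter, which in turn depends on f.

About non-keys

- An assumption in this framework is that the training sample distribution needs to match or be close to the test distribution of non-keys. For many applications, past workloads or historical data can be used to get an appropriate non-key sample.

- In short: the non-key training sample must track the non-key query distribution, and past workloads or historical data are the usual source

- Justification: Given sufficient data, we can determine an empirical false positive rate on a test set, and use that to predict future behavior. Under the assumption that the test set has the same distribution as future queries, standard Chernoff bounds provide that the empirical false positive rate will be close to the false positive rate on future queries, as both will be concentrated around the expectation. In many learning theory settings, this empirical false positive rate appears to be referred to as simply the false positive rate; we emphasize that false positive rate, as we have explained above, typically means something different in the Bloom filter literature

- Sandwiching

- In addition to the backup Bloom filter placed after the model, the sandwiching structure also uses an initial Bloom filter placed before it

- The size of the backup Bloom filter scales with the number of false negatives produced by the RNN model, so it cannot shrink below what those keys require. An initial Bloom filter removes many non-keys before they reach the model, so extra space is better spent there, which lowers the space the backup filter needs for a given overall FPR

- Another advantage of sandwiching is that it is more robust than the learned Bloom filter structure proposed by Kraska et al. If the training set and the test set of the learned Bloom filter have different distributions, the RNN model's FPR on real queries may be far higher than expected. Adding an initial Bloom filter mitigates this, because it filters out part of the non-key queries up front and so bounds the overall FPR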
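The space argument can be made concrete with a rough numerical sketch. It assumes the common approximation that a Bloom filter with j bits per stored key has FPR α^j with α ≈ 0.6185, and a model with false positive rate `fp` and false negative rate `fn`; bit budgets are expressed per original key, so the backup filter sees b2/fn bits per stored key. The function names and the sample numbers are illustrative, not taken from the notes.

```python
ALPHA = 0.6185  # assumed per-bit FPR factor of a well-tuned Bloom filter


def learned_fpr(b, fp, fn):
    """Model followed by a backup filter that gets the whole budget b."""
    return fp + (1 - fp) * ALPHA ** (b / fn)


def sandwich_fpr(b1, b2, fp, fn):
    """Initial filter (b1 bits/key), then the model, then the backup filter (b2 bits/key)."""
    return ALPHA ** b1 * (fp + (1 - fp) * ALPHA ** (b2 / fn))


def best_split(b, fp, fn, steps=1000):
    """Grid-search the split of the budget b between the two filters."""
    candidates = [b * i / steps for i in range(steps + 1)]
    b2 = min(candidates, key=lambda c: sandwich_fpr(b - c, c, fp, fn))
    return b - b2, b2


budget, fp, fn = 8.0, 0.01, 0.5
b1, b2 = best_split(budget, fp, fn)
print(b1, b2)
print(learned_fpr(budget, fp, fn), sandwich_fpr(b1, b2, fp, fn))
```

With these numbers the backup filter receives only part of the budget and the remainder goes to the initial filter, which is the behavior the sandwiching argument predicts.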

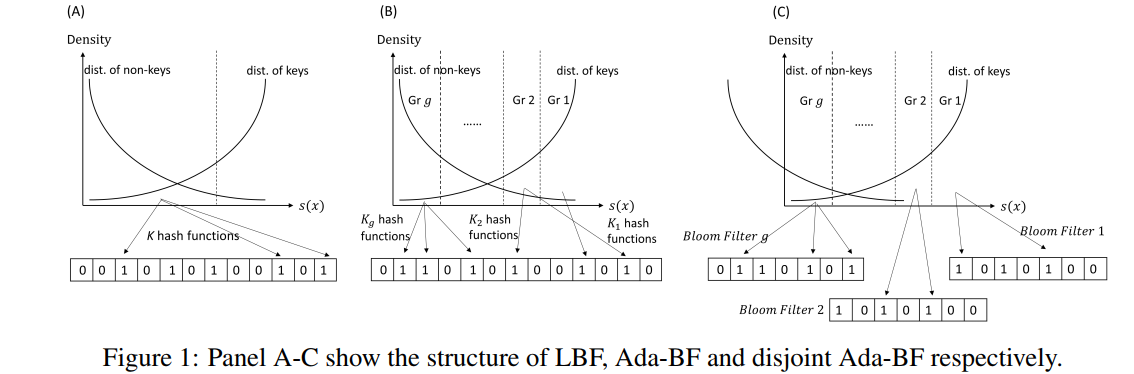

Paper 2-2: Adaptive Learned Bloom Filter (Ada-BF)

NeurIPS 2020

How is the key distribution obtained? How are non-keys selected?

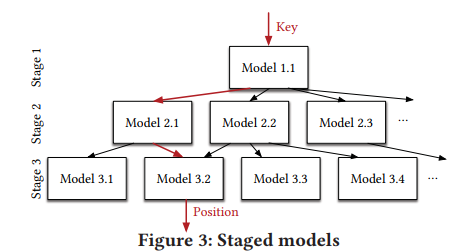

Paper 2-3: The Case for Learned Index Structures && Partition Learned Bloom Filter

- Tim Kraska, Jeffrey Dean

Other papers

- Hash Adaptive Bloom Filter, ICDE'21

- Compressing (Multidimensional) Learned Bloom Filters, NeurIPS workshop 2020

- Meta-Learning Neural Bloom Filters, ICML'19

- Learned FBF: Learning-Based Functional Bloom Filter for Key–Value Storage, TOC'21

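Summary of problems

- Range Filter

- Rosetta: cannot avoid the overhead of probing the filters and merging the probe results; unsuitable for long range queries

- SuRF: builds a new structure; rebuilding the index when new data is inserted is expensive; the succinct structure performs poorly

- Chucky: no range query support

- Learned Filter

- Strengths: aware of data patterns, high accuracy, small size

- Weaknesses: no range query support; model accuracy varies with the data; no support for dynamic inserts and updates

- SNARF: A Learning-Enhanced Range Filter

Design and evaluation

- Combining the two: Learned + Range Filter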

Filter的设计

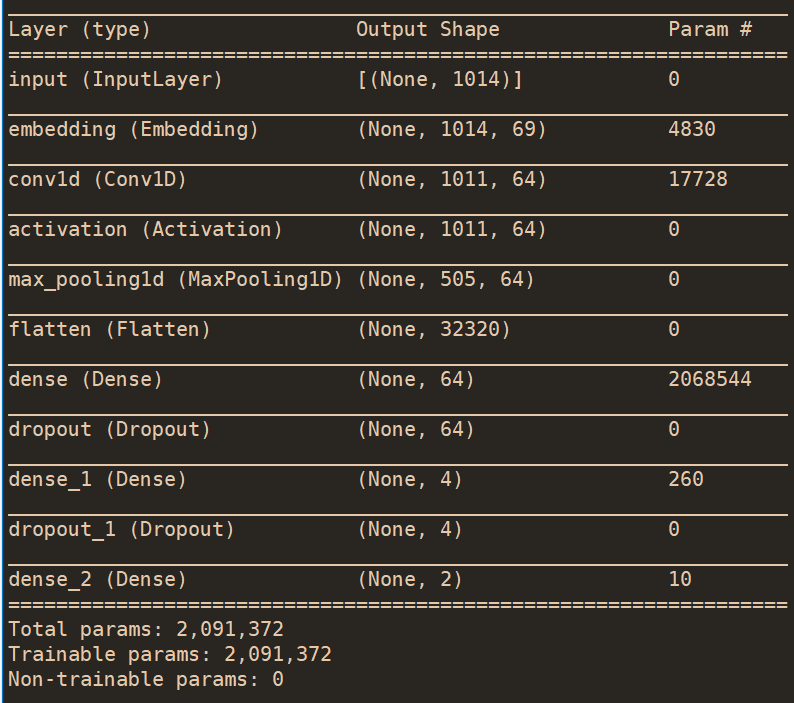

Learned Model: 二分类问题

- f(x)的选择:RMI、Lr、Plr、SVM、CART、CNN、RNN

- 理论证明:FPR的评估?

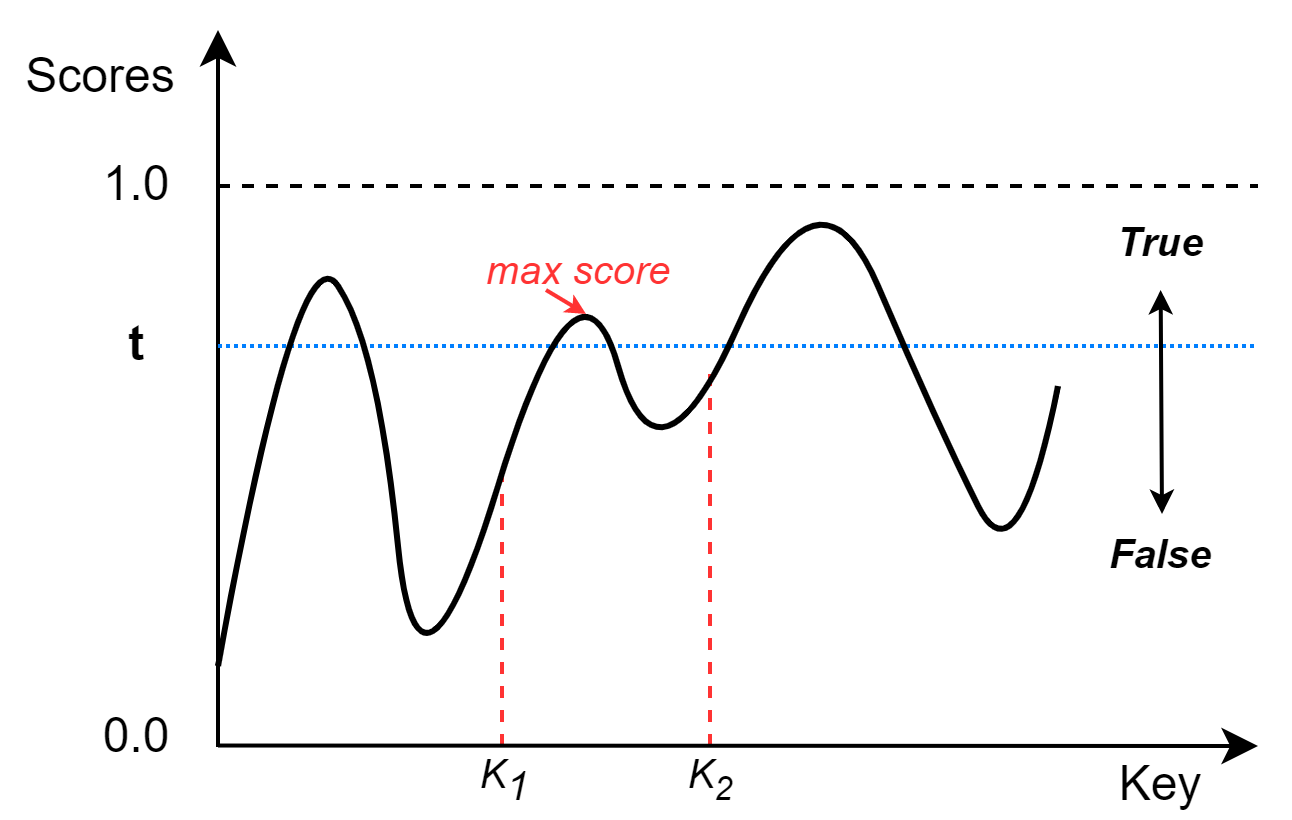

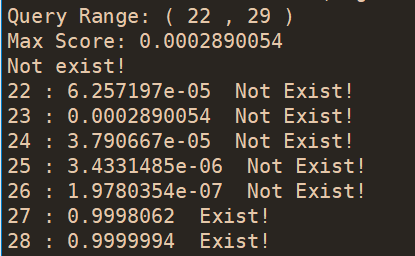

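LRF design 1 (an algorithmic problem): find the maximum of f(x) over the range

Is this sound, and is it computable? Needs a mathematical derivation/proof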

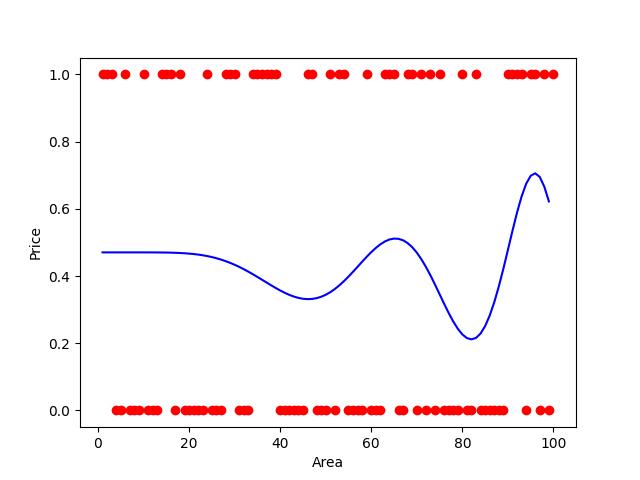

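LRF design 2 (an interpolation problem): plot the key-to-score mapping and check whether the highest score within the range exceeds t; example: (k1, k2)

- Sample selection: positive samples are the keys in the SSTable; negative samples: how to generate non-keys?

- Regional maximum (local maxima): take derivatives; spline interpolation & polynomial regression

Other approaches?

- Kernel Density Estimation (KDE) fitting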

In KV Store

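Motivation

- On NVMe SSDs, the existing Bloom filter becomes a bottleneck

- No range query support

- Data awareness

Design: one filter per SSTable; the read-only data fits the requirements of a learned index

Model and string handling: can this be combined with a partitioned learned Bloom filter / RocksDB's partitioned Bloom filter?

- RocksDB partitioned Bloom filter: with the full-filter storage format, the filter block can be split into multiple smaller blocks to reduce pressure on the block cache

Range Filter problems: optimizing merge overhead, optimizing long range queries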

Key-range partition and garbage collection

Evaluation

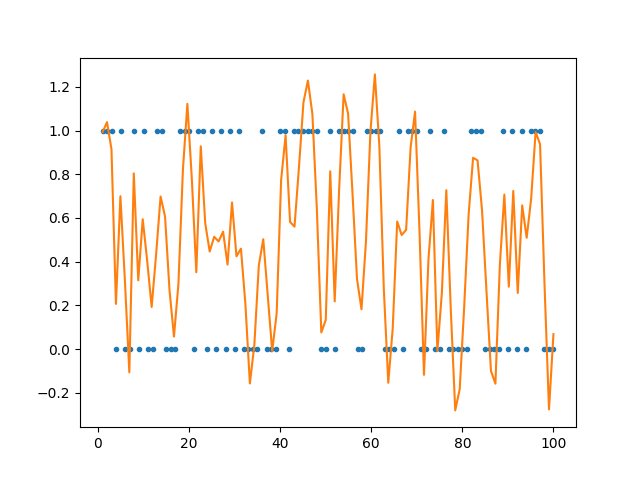

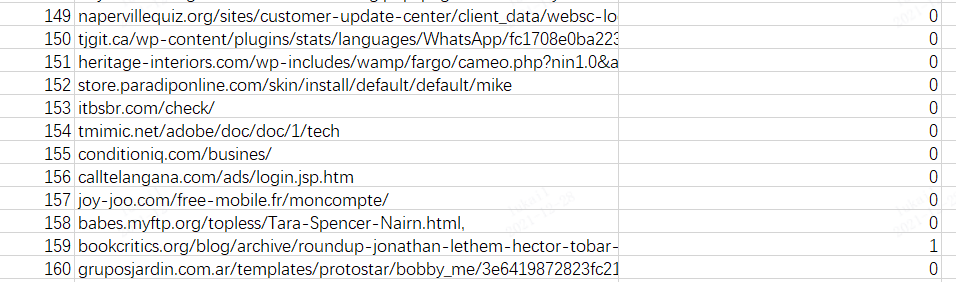

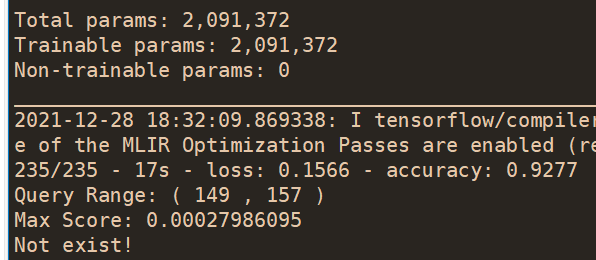

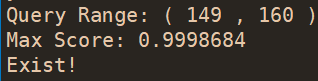

LBF implementation: try LR, SVM, CNN, RNN (LSTM, GRU), RMI

URL data, CNN: model size 25M, accuracy 0.972

db_bench data

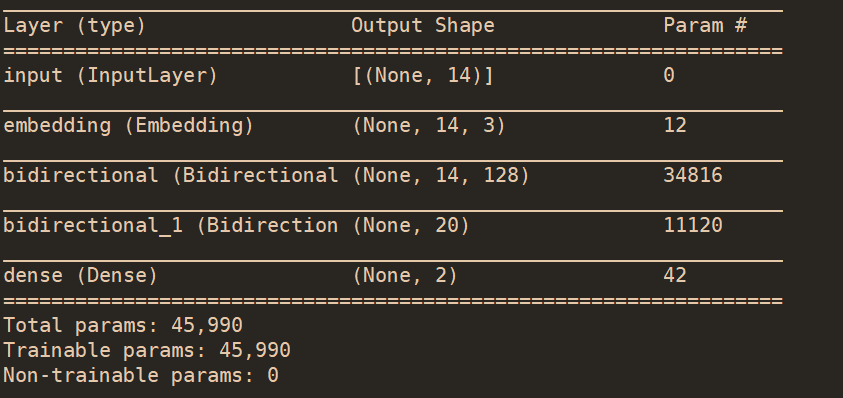

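- X: 0 to 10000; y ∈ {0, 1}; 5,000 zeros and 5,000 ones

- LR: 0.453; SVM: 0.562; RF: 0.503; CNN: 0.693; LSTM: 0.706; bidirectional LSTM: 0.834

- Two-layer bidirectional LSTM: model size 5.4M, accuracy 0.997

Finding the extremum

Method 1: scipy.optimize.minimize

Method 2: gradient descent

Method 3: turn it into a training process

- Training a neural network is itself a search for an optimum: the minimum of the loss

- Input (x, y, z), parameters (a, b, c), model ax + by + cz

- Training: find the (a, b, c) that minimize loss = pre - y

- Our case: the model (a, b, c) is fixed; find the (x, y, z) that minimize pre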
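The idea of optimizing over the input while the model stays fixed can be sketched in one dimension. Here `score` is a hand-written stand-in for an already trained model f(x), and `range_max` runs projected gradient ascent from several starting points inside [a, b]; maximizing f is the same search as minimizing -f. All names, the bump width, the step size, and the threshold t = 0.5 are assumptions made for illustration.

```python
import math

KEYS = [120.0, 480.0, 500.0]  # hypothetical key positions
WIDTH = 15.0                  # hypothetical width of each score bump


def score(x):
    """Stand-in for a fixed, already trained model f(x) with values in [0, 1]."""
    return max(math.exp(-((x - k) / WIDTH) ** 2) for k in KEYS)


def range_max(f, a, b, starts=16, steps=200, lr=20.0, eps=1e-3):
    """Approximate max of f over [a, b]; the model is fixed, only x moves."""
    best = max(f(a), f(b))
    for i in range(starts):
        x = a + (b - a) * (i + 0.5) / starts
        for _ in range(steps):
            grad = (f(x + eps) - f(x - eps)) / (2 * eps)  # numerical df/dx
            x = min(b, max(a, x + lr * grad))             # ascent step, clipped to [a, b]
        best = max(best, f(x))
    return best


def range_may_contain(f, a, b, t=0.5):
    """Range filter decision: report 'maybe' when the best score reaches t."""
    return range_max(f, a, b) >= t


print(range_may_contain(score, 100, 140))  # a key lies inside the range
print(range_may_contain(score, 200, 400))  # far from every key
print(range_may_contain(score, 450, 470))  # no key inside, but the score near 470 is high
```

Gradient ascent only finds local maxima, so with too few starting points a narrow peak could be missed; for a filter that would be a false negative, which is why the open question about a provable bound on the range maximum matters.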

- Testing the original paper's code

- Model: GRU

- Dataset: URL data

1. Bloom Filter

Bits needed 14293028

Hash functions needed 6

Test false positive rate: 0.010326521200924817

2. GRU

Params needed 2545

Bloom filter bits needed 7308941

Total bits needed 7311486

Test False positive rate: 0.010351505356869193

范围取样

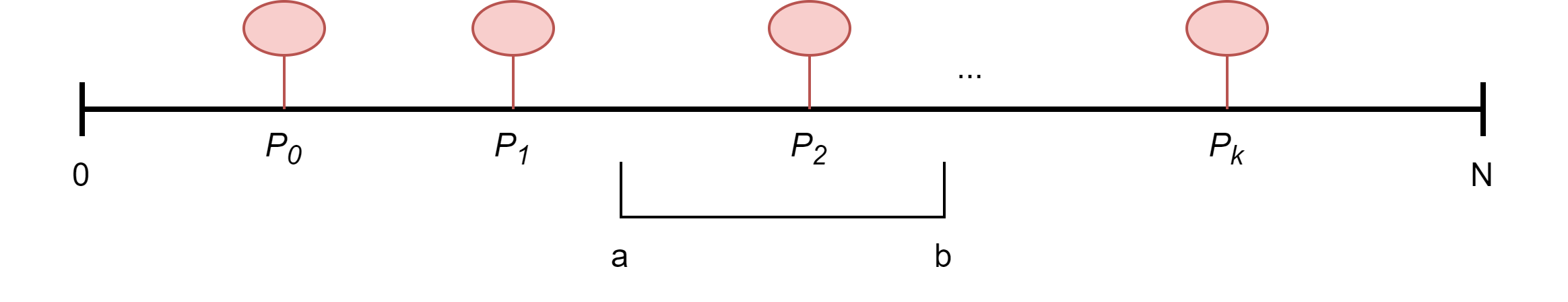

- 采蘑菇问题

在一维度坐标[0, N]上按照函数f(x)位置种下k个蘑菇,坐标分别为P0,P1,…,Pk,其中Pi = f(i);

一个农民在(a, b)范围内采蘑菇,a与b任意取值,0<= a < b <= N,需要判断该农民能否采到蘑菇;

即如何构建布尔函数g(a,b),若g = 1,表示可以采到蘑菇;g = 0,不能采到。
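An exact g(a, b) is easy to compute when the positions are known, which makes it a convenient source of labels for training and checking an approximate model. The sketch below treats (a, b) as an open interval, which is an assumption about the notation; `make_g`, `sample_ranges`, and the position function used in the usage lines are hypothetical.

```python
import random
from bisect import bisect_right


def make_g(positions):
    """Build the exact g(a, b) from the mushroom positions."""
    pts = sorted(positions)

    def g(a, b):
        # 1 if some position lies strictly inside (a, b), else 0.
        i = bisect_right(pts, a)  # index of the first position > a
        return 1 if i < len(pts) and pts[i] < b else 0

    return g


def sample_ranges(g, n_max, count, max_len, seed=0):
    """Draw random ranges with 0 <= a < b <= n_max and label each with g."""
    rng = random.Random(seed)
    samples = []
    for _ in range(count):
        a = rng.randint(0, n_max - 1)
        b = rng.randint(a + 1, min(n_max, a + max_len))
        samples.append((a, b, g(a, b)))
    return samples


N = 1000
positions = [(37 * i) % N for i in range(1, 21)]  # hypothetical f(i)
g = make_g(positions)
samples = sample_ranges(g, N, count=5000, max_len=50)
num1 = sum(label for _, _, label in samples)
num0 = len(samples) - num1
print("num0:", num0, "num1:", num1)
```

The ratio of num0 to num1 depends strongly on `max_len` relative to the gaps between positions, so the range-length distribution of the sampler should match the query workload the filter is meant for.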

Data: num0: 1703 num1: 178090

num0: 10509 num1: 199281

Prediction: num-1: 0 num0: 179128 num1: 665

num-1: 607 num0: 169174 num1: 10012

LR model

Data: num0: 10504 num1: 199286

Prediction: num-1: 0 num0: 209785 num1: 5

Next

- Range Filter

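- Theoretical support?

- Non-key data?

- How should the Range Filter be implemented?

- Evaluation

- Test other models for the LBF; LR alone is too inaccurate

- Apply the LBF to RocksDB + test on other datasets

SuRF evaluation

1. Without a filter

Point queries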

throughput: 778.53 rocksdb.db.get.micros statistics Percentiles :=> 50 : 1192.427183 95 : 2191.246016 99 : 2813.883330 100 : 18167.000000 rocksdb.block.cache.miss COUNT : 309894253 rocksdb.block.cache.hit COUNT : 1204937 rocksdb.block.cache.add COUNT : 6935340 rocksdb.block.cache.add.failures COUNT : 0 rocksdb.block.cache.index.miss COUNT : 1428195 rocksdb.block.cache.index.hit COUNT : 1055823 rocksdb.block.cache.index.add COUNT : 1428195 rocksdb.block.cache.index.bytes.insert COUNT : 605603079464 rocksdb.block.cache.index.bytes.evict COUNT : 605518338384 rocksdb.block.cache.filter.miss COUNT : 0 rocksdb.block.cache.filter.hit COUNT : 0 rocksdb.block.cache.filter.add COUNT : 0 rocksdb.block.cache.filter.bytes.insert COUNT : 0 rocksdb.block.cache.filter.bytes.evict COUNT : 0 rocksdb.block.cache.data.miss COUNT : 308466058 rocksdb.block.cache.data.hit COUNT : 149114 rocksdb.block.cache.data.add COUNT : 5507145 rocksdb.block.cache.data.bytes.insert COUNT : 23038163432 rocksdb.block.cache.bytes.read COUNT : 391096497672 rocksdb.block.cache.bytes.write COUNT : 628641242896 rocksdb.bloom.filter.useful COUNT : 0 rocksdb.persistent.cache.hit COUNT : 0 rocksdb.persistent.cache.miss COUNT : 0 rocksdb.sim.block.cache.hit COUNT : 0 rocksdb.sim.block.cache.miss COUNT : 0 rocksdb.memtable.hit COUNT : 575 rocksdb.memtable.miss COUNT : 1049425 rocksdb.l0.hit COUNT : 1840 rocksdb.l1.hit COUNT : 2311 rocksdb.l2andup.hit COUNT : 995274 rocksdb.compaction.key.drop.new COUNT : 412 rocksdb.compaction.key.drop.obsolete COUNT : 0 rocksdb.compaction.key.drop.range_del COUNT : 0 rocksdb.compaction.key.drop.user COUNT : 0 rocksdb.compaction.range_del.drop.obsolete COUNT : 0 rocksdb.compaction.optimized.del.drop.obsolete COUNT : 0 rocksdb.number.keys.written COUNT : 100000000 rocksdb.number.keys.read COUNT : 1050000 rocksdb.number.keys.updated COUNT : 0 rocksdb.bytes.written COUNT : 104800000000 rocksdb.bytes.read COUNT : 1024000000 rocksdb.number.db.seek COUNT : 0 rocksdb.number.db.next 
COUNT : 0 rocksdb.number.db.prev COUNT : 0 rocksdb.number.db.seek.found COUNT : 0 rocksdb.number.db.next.found COUNT : 0 rocksdb.number.db.prev.found COUNT : 0 rocksdb.db.iter.bytes.read COUNT : 0 rocksdb.no.file.closes COUNT : 0 rocksdb.no.file.opens COUNT : 41216 rocksdb.no.file.errors COUNT : 0 rocksdb.l0.slowdown.micros COUNT : 0 rocksdb.memtable.compaction.micros COUNT : 0 rocksdb.l0.num.files.stall.micros COUNT : 0 rocksdb.stall.micros COUNT : 2041476131 rocksdb.db.mutex.wait.micros COUNT : 0 rocksdb.rate.limit.delay.millis COUNT : 0 rocksdb.num.iterators COUNT : 0 rocksdb.number.multiget.get COUNT : 0 rocksdb.number.multiget.keys.read COUNT : 0 rocksdb.number.multiget.bytes.read COUNT : 0 rocksdb.number.deletes.filtered COUNT : 0 rocksdb.number.merge.failures COUNT : 0 rocksdb.bloom.filter.prefix.checked COUNT : 0 rocksdb.bloom.filter.prefix.useful COUNT : 0 rocksdb.number.reseeks.iteration COUNT : 0 rocksdb.getupdatessince.calls COUNT : 0 rocksdb.block.cachecompressed.miss COUNT : 0 rocksdb.block.cachecompressed.hit COUNT : 0 rocksdb.block.cachecompressed.add COUNT : 0 rocksdb.block.cachecompressed.add.failures COUNT : 0 rocksdb.wal.synced COUNT : 0 rocksdb.wal.bytes COUNT : 104800000000 rocksdb.write.self COUNT : 100000000 rocksdb.write.other COUNT : 0 rocksdb.write.timeout COUNT : 0 rocksdb.write.wal COUNT : 200000000 rocksdb.compact.read.bytes COUNT : 1301603543827 rocksdb.compact.write.bytes COUNT : 1272673781248 rocksdb.flush.write.bytes COUNT : 105017065602 rocksdb.number.direct.load.table.properties COUNT : 0 rocksdb.number.superversion_acquires COUNT : 310 rocksdb.number.superversion_releases COUNT : 307 rocksdb.number.superversion_cleanups COUNT : 305 rocksdb.number.block.compressed COUNT : 0 rocksdb.number.block.decompressed COUNT : 0 rocksdb.number.block.not_compressed COUNT : 0 rocksdb.merge.operation.time.nanos COUNT : 0 rocksdb.filter.operation.time.nanos COUNT : 0 rocksdb.row.cache.hit COUNT : 0 rocksdb.row.cache.miss COUNT : 0 
rocksdb.read.amp.estimate.useful.bytes COUNT : 0 rocksdb.read.amp.total.read.bytes COUNT : 0 rocksdb.number.rate_limiter.drains COUNT : 0 rocksdb.db.get.micros statistics Percentiles :=> 50 : 1192.427183 95 : 2191.246016 99 : 2813.883330 100 : 18167.000000 rocksdb.db.write.micros statistics Percentiles :=> 50 : 5.057838 95 : 20.650113 99 : 1077.627671 100 : 9253.000000 rocksdb.compaction.times.micros statistics Percentiles :=> 50 : 818358.974359 95 : 1890631.313131 99 : 6125120.000000 100 : 7993369.000000 rocksdb.subcompaction.setup.times.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.table.sync.micros statistics Percentiles :=> 50 : 365.655172 95 : 947.844828 99 : 2357.894737 100 : 3274.000000 rocksdb.compaction.outfile.sync.micros statistics Percentiles :=> 50 : 282.647026 95 : 677.332593 99 : 1926.250000 100 : 29137.000000 rocksdb.wal.file.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.manifest.file.sync.micros statistics Percentiles :=> 50 : 294.953460 95 : 526.962209 99 : 744.476136 100 : 5748.000000 rocksdb.table.open.io.micros statistics Percentiles :=> 50 : 826.696150 95 : 1597.548845 99 : 1890.380107 100 : 14988.000000 rocksdb.db.multiget.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.block.compaction.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.block.get.micros statistics Percentiles :=> 50 : 102.342230 95 : 163.463288 99 : 169.834521 100 : 8715.000000 rocksdb.write.raw.block.micros statistics Percentiles :=> 50 : 0.618389 95 : 2.123947 99 : 3.862707 100 : 69230.000000 rocksdb.l0.slowdown.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.memtable.compaction.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.num.files.stall.count statistics 
Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.hard.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.soft.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.numfiles.in.singlecompaction statistics Percentiles :=> 50 : 1.000000 95 : 1.141121 99 : 17.197391 100 : 26.000000 rocksdb.db.seek.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.db.write.stall statistics Percentiles :=> 50 : 0.528350 95 : 898.708763 99 : 1220.016908 100 : 6072.000000 rocksdb.sst.read.micros statistics Percentiles :=> 50 : 111.179925 95 : 519.101450 99 : 638.471213 100 : 14061.000000 rocksdb.num.subcompactions.scheduled statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.per.read statistics Percentiles :=> 50 : 1024.000000 95 : 1024.000000 99 : 1024.000000 100 : 1024.000000 rocksdb.bytes.per.write statistics Percentiles :=> 50 : 1048.000000 95 : 1048.000000 99 : 1048.000000 100 : 1048.000000 rocksdb.bytes.per.multiget statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.compressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.decompressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.compression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.decompression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.num.merge_operands statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000

** Compaction Stats [default] **

| Level | Files | Size | Score | Read(GB) | Rn(GB) | Rnp1(GB) | Write(GB) | Wnew(GB) | Moved(GB) | W-Amp | Rd(MB/s) | Wr(MB/s) | Comp(sec) | Comp(cnt) | Avg(sec) | KeyIn | KeyDrop |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L0 | 3/0 | 184.33 MB | 0.8 | 0.0 | 0.0 | 0.0 | 97.8 | 97.8 | 0.0 | 1.0 | 0.0 | 476.6 | 210 | 1630 | 0.129 | 0 | 0 |
| L1 | 5/0 | 233.37 MB | 0.9 | 193.2 | 97.6 | 95.6 | 193.2 | 97.6 | 0.0 | 2.0 | 415.4 | 415.4 | 476 | 111 | 4.290 | 197M | 20 |
| L2 | 54/0 | 2.46 GB | 1.0 | 534.3 | 96.9 | 437.5 | 534.3 | 96.8 | 0.5 | 5.5 | 395.8 | 395.8 | 1382 | 1522 | 0.908 | 546M | 97 |
| L3 | 403/0 | 24.96 GB | 1.0 | 405.0 | 78.3 | 326.6 | 405.0 | 78.3 | 16.5 | 5.2 | 405.8 | 405.8 | 1022 | 1180 | 0.866 | 414M | 256 |
| L4 | 1117/0 | 69.92 GB | 0.3 | 52.8 | 20.8 | 32.0 | 52.8 | 20.8 | 49.1 | 2.5 | 385.4 | 385.4 | 140 | 313 | 0.448 | 53M | 39 |
| Sum | 1582/0 | 97.75 GB | 0.0 | 1185.3 | 293.6 | 891.7 | 1283.1 | 391.4 | 66.2 | 13.1 | 375.7 | 406.7 | 3231 | 4756 | 0.679 | 1211M | 412 |
| Int | 0/0 | 0.00 KB | 0.0 | 11.4 | 2.5 | 8.9 | 11.4 | 2.5 | 3.3 | 12256922651.0 | 389.1 | 389.1 | 30 | 39 | 0.770 | 11M | 8 |

- Uptime(secs): 4250.6 total, 1236.5 interval
- Flush(GB): cumulative 97.804, interval 0.000
- AddFile(GB): cumulative 0.000, interval 0.000
- AddFile(Total Files): cumulative 0, interval 0
- AddFile(L0 Files): cumulative 0, interval 0
- AddFile(Keys): cumulative 0, interval 0
- Cumulative compaction: 1283.06 GB write, 309.10 MB/s write, 1185.31 GB read, 285.55 MB/s read, 3230.8 seconds
- Interval compaction: 11.42 GB write, 9.45 MB/s write, 11.42 GB read, 9.45 MB/s read, 30.0 seconds
- Stalls(count): 1373 level0_slowdown, 96 level0_slowdown_with_compaction, 0 level0_numfiles, 0 level0_numfiles_with_compaction, 0 stop for pending_compaction_bytes, 3130 slowdown for pending_compaction_bytes, 0 memtable_compaction, 0 memtable_slowdown, interval 0 total count

** File Read Latency Histogram By Level [default] ** ** Level 0 read latency histogram (micros): Count: 3050773 Average: 101.1093 StdDev: 33.87 Min: 67 Median: 101.2603 Max: 8710

Percentiles: P50: 101.26 P75: 130.28 P99: 169.79 P99.9: 501.99 P99.99: 1041.59

( 51, 76 ] 407209 13.348% 13.348% ### ( 76, 110 ] 1505048 49.333% 62.681% ########## ( 110, 170 ] 1111908 36.447% 99.128% ####### ( 170, 250 ] 14362 0.471% 99.599% ( 250, 380 ] 7072 0.232% 99.830% ( 380, 580 ] 3481 0.114% 99.945% ( 580, 870 ] 1271 0.042% 99.986% ( 870, 1300 ] 293 0.010% 99.996% ( 1300, 1900 ] 80 0.003% 99.998% ( 1900, 2900 ] 37 0.001% 100.000% ( 2900, 4400 ] 11 0.000% 100.000% ( 6600, 9900 ] 1 0.000% 100.000%

** Level 1 read latency histogram (micros): Count: 408588 Average: 113.3401 StdDev: 64.77 Min: 21 Median: 105.6646 Max: 5478

Percentiles: P50: 105.66 P75: 139.20 P99: 467.08 P99.9: 655.97 P99.99: 1349.41

( 15, 22 ] 1 0.000% 0.000% ( 22, 34 ] 2 0.000% 0.001% ( 34, 51 ] 9 0.002% 0.003% ( 51, 76 ] 39530 9.675% 9.678% ## ( 76, 110 ] 188830 46.215% 55.893% ######### ( 110, 170 ] 160402 39.258% 95.151% ######## ( 170, 250 ] 4547 1.113% 96.263% ( 250, 380 ] 8428 2.063% 98.326% ( 380, 580 ] 6323 1.548% 99.874% ( 580, 870 ] 410 0.100% 99.974% ( 870, 1300 ] 63 0.015% 99.989% ( 1300, 1900 ] 26 0.006% 99.996% ( 1900, 2900 ] 10 0.002% 99.998% ( 2900, 4400 ] 6 0.001% 100.000% ( 4400, 6600 ] 1 0.000% 100.000%

** Level 2 read latency histogram (micros): Count: 759492 Average: 146.4239 StdDev: 119.45 Min: 20 Median: 107.4768 Max: 14061

Percentiles: P50: 107.48 P75: 154.66 P99: 564.24 P99.9: 855.17 P99.99: 2441.82

( 15, 22 ] 3 0.000% 0.000% ( 22, 34 ] 14 0.002% 0.002% ( 34, 51 ] 36 0.005% 0.007% ( 51, 76 ] 78836 10.380% 10.387% ## ( 76, 110 ] 324974 42.788% 53.175% ######### ( 110, 170 ] 222695 29.322% 82.497% ###### ( 170, 250 ] 19860 2.615% 85.112% # ( 250, 380 ] 58206 7.664% 92.776% ## ( 380, 580 ] 51317 6.757% 99.532% # ( 580, 870 ] 2942 0.387% 99.920% ( 870, 1300 ] 406 0.053% 99.973% ( 1300, 1900 ] 94 0.012% 99.986% ( 1900, 2900 ] 61 0.008% 99.994% ( 2900, 4400 ] 40 0.005% 99.999% ( 4400, 6600 ] 6 0.001% 100.000% ( 6600, 9900 ] 1 0.000% 100.000% ( 14000, 22000 ] 1 0.000% 100.000%

** Level 3 read latency histogram (micros): Count: 1860592 Average: 255.4789 StdDev: 183.81 Min: 28 Median: 158.8238 Max: 13596

Percentiles: P50: 158.82 P75: 426.17 P99: 787.92 P99.9: 1203.28 P99.99: 2744.80

( 22, 34 ] 5 0.000% 0.000% ( 34, 51 ] 14 0.001% 0.001% ( 51, 76 ] 99110 5.327% 5.328% # ( 76, 110 ] 525849 28.262% 33.590% ###### ( 110, 170 ] 375208 20.166% 53.756% #### ( 170, 250 ] 7241 0.389% 54.146% ( 250, 380 ] 264664 14.225% 68.370% ### ( 380, 580 ] 534359 28.720% 97.090% ###### ( 580, 870 ] 49564 2.664% 99.754% # ( 870, 1300 ] 3506 0.188% 99.942% ( 1300, 1900 ] 657 0.035% 99.978% ( 1900, 2900 ] 271 0.015% 99.992% ( 2900, 4400 ] 135 0.007% 100.000% ( 4400, 6600 ] 2 0.000% 100.000% ( 6600, 9900 ] 1 0.000% 100.000% ( 9900, 14000 ] 6 0.000% 100.000%

** Level 4 read latency histogram (micros): Count: 857472 Average: 267.4790 StdDev: 181.75 Min: 21 Median: 228.9559 Max: 13714

Percentiles: P50: 228.96 P75: 436.88 P99: 785.86 P99.9: 1170.02 P99.99: 2847.70

( 15, 22 ] 1 0.000% 0.000% ( 22, 34 ] 2 0.000% 0.000% ( 34, 51 ] 1 0.000% 0.000% ( 51, 76 ] 36309 4.234% 4.235% # ( 76, 110 ] 222593 25.959% 30.194% ##### ( 110, 170 ] 168009 19.594% 49.788% #### ( 170, 250 ] 2471 0.288% 50.076% ( 250, 380 ] 138488 16.151% 66.227% ### ( 380, 580 ] 264517 30.848% 97.075% ###### ( 580, 870 ] 23253 2.712% 99.787% # ( 870, 1300 ] 1391 0.162% 99.949% ( 1300, 1900 ] 229 0.027% 99.976% ( 1900, 2900 ] 129 0.015% 99.991% ( 2900, 4400 ] 77 0.009% 100.000% ( 9900, 14000 ] 2 0.000% 100.000%

** DB Stats ** Uptime(secs): 4250.6 total, 1236.5 interval Cumulative writes: 100M writes, 100M keys, 100M commit groups, 1.0 writes per commit group, ingest: 97.60 GB, 23.51 MB/s Cumulative WAL: 100M writes, 0 syncs, 100000000.00 writes per sync, written: 97.60 GB, 23.51 MB/s Cumulative stall: 00:34:1.476 H:M:S, 48.0 percent Interval writes: 0 writes, 0 keys, 0 commit groups, 0.0 writes per commit group, ingest: 0.00 MB, 0.00 MB/s Interval WAL: 0 writes, 0 syncs, 0.00 writes per sync, written: 0.00 MB, 0.00 MB/s Interval stall: 00:00:0.000 H:M:S, 0.0 percent

10339690501 6002 192210247282 2245074190 1013077194 226596 124515442891 2584834989 0 195847054 631303944

I/O count: 547295

Range queries (closed)

Filter DISABLED No Compression closed range query throughput: 780.034 rocksdb.db.seek.micros statistics Percentiles :=> 50 : 1259.090241 95 : 2094.587629 99 : 2738.917526 100 : 2871.000000 rocksdb.block.cache.miss COUNT : 4323025 rocksdb.block.cache.hit COUNT : 1506810 rocksdb.block.cache.add COUNT : 4323025 rocksdb.block.cache.add.failures COUNT : 0 rocksdb.block.cache.index.miss COUNT : 1427187 rocksdb.block.cache.index.hit COUNT : 1442487 rocksdb.block.cache.index.add COUNT : 1427187 rocksdb.block.cache.index.bytes.insert COUNT : 606839608776 rocksdb.block.cache.index.bytes.evict COUNT : 606753687720 rocksdb.block.cache.filter.miss COUNT : 0 rocksdb.block.cache.filter.hit COUNT : 0 rocksdb.block.cache.filter.add COUNT : 0 rocksdb.block.cache.filter.bytes.insert COUNT : 0 rocksdb.block.cache.filter.bytes.evict COUNT : 0 rocksdb.block.cache.data.miss COUNT : 2895838 rocksdb.block.cache.data.hit COUNT : 64323 rocksdb.block.cache.data.add COUNT : 2895838 rocksdb.block.cache.data.bytes.insert COUNT : 12110899712 rocksdb.block.cache.bytes.read COUNT : 560821193152 rocksdb.block.cache.bytes.write COUNT : 618950508488 rocksdb.bloom.filter.useful COUNT : 0 rocksdb.persistent.cache.hit COUNT : 0 rocksdb.persistent.cache.miss COUNT : 0 rocksdb.sim.block.cache.hit COUNT : 0 rocksdb.sim.block.cache.miss COUNT : 0 rocksdb.memtable.hit COUNT : 0 rocksdb.memtable.miss COUNT : 1000000 rocksdb.l0.hit COUNT : 0 rocksdb.l1.hit COUNT : 2132 rocksdb.l2andup.hit COUNT : 997868 rocksdb.compaction.key.drop.new COUNT : 0 rocksdb.compaction.key.drop.obsolete COUNT : 0 rocksdb.compaction.key.drop.range_del COUNT : 0 rocksdb.compaction.key.drop.user COUNT : 0 rocksdb.compaction.range_del.drop.obsolete COUNT : 0 rocksdb.compaction.optimized.del.drop.obsolete COUNT : 0 rocksdb.number.keys.written COUNT : 0 rocksdb.number.keys.read COUNT : 1000000 rocksdb.number.keys.updated COUNT : 0 rocksdb.bytes.written COUNT : 0 rocksdb.bytes.read COUNT : 1024000000 rocksdb.number.db.seek COUNT : 50000 
rocksdb.number.db.next COUNT : 0 rocksdb.number.db.prev COUNT : 0 rocksdb.number.db.seek.found COUNT : 24956 rocksdb.number.db.next.found COUNT : 0 rocksdb.number.db.prev.found COUNT : 0 rocksdb.db.iter.bytes.read COUNT : 25754592 rocksdb.no.file.closes COUNT : 0 rocksdb.no.file.opens COUNT : 1583 rocksdb.no.file.errors COUNT : 0 rocksdb.l0.slowdown.micros COUNT : 0 rocksdb.memtable.compaction.micros COUNT : 0 rocksdb.l0.num.files.stall.micros COUNT : 0 rocksdb.stall.micros COUNT : 0 rocksdb.db.mutex.wait.micros COUNT : 0 rocksdb.rate.limit.delay.millis COUNT : 0 rocksdb.num.iterators COUNT : 0 rocksdb.number.multiget.get COUNT : 0 rocksdb.number.multiget.keys.read COUNT : 0 rocksdb.number.multiget.bytes.read COUNT : 0 rocksdb.number.deletes.filtered COUNT : 0 rocksdb.number.merge.failures COUNT : 0 rocksdb.bloom.filter.prefix.checked COUNT : 0 rocksdb.bloom.filter.prefix.useful COUNT : 0 rocksdb.number.reseeks.iteration COUNT : 0 rocksdb.getupdatessince.calls COUNT : 0 rocksdb.block.cachecompressed.miss COUNT : 0 rocksdb.block.cachecompressed.hit COUNT : 0 rocksdb.block.cachecompressed.add COUNT : 0 rocksdb.block.cachecompressed.add.failures COUNT : 0 rocksdb.wal.synced COUNT : 0 rocksdb.wal.bytes COUNT : 0 rocksdb.write.self COUNT : 0 rocksdb.write.other COUNT : 0 rocksdb.write.timeout COUNT : 0 rocksdb.write.wal COUNT : 0 rocksdb.compact.read.bytes COUNT : 0 rocksdb.compact.write.bytes COUNT : 0 rocksdb.flush.write.bytes COUNT : 0 rocksdb.number.direct.load.table.properties COUNT : 0 rocksdb.number.superversion_acquires COUNT : 1 rocksdb.number.superversion_releases COUNT : 0 rocksdb.number.superversion_cleanups COUNT : 0 rocksdb.number.block.compressed COUNT : 0 rocksdb.number.block.decompressed COUNT : 0 rocksdb.number.block.not_compressed COUNT : 0 rocksdb.merge.operation.time.nanos COUNT : 0 rocksdb.filter.operation.time.nanos COUNT : 0 rocksdb.row.cache.hit COUNT : 0 rocksdb.row.cache.miss COUNT : 0 rocksdb.read.amp.estimate.useful.bytes COUNT : 0 
rocksdb.read.amp.total.read.bytes COUNT : 0 rocksdb.number.rate_limiter.drains COUNT : 0 rocksdb.db.get.micros statistics Percentiles :=> 50 : 942.612488 95 : 1828.156979 99 : 2513.466334 100 : 4026.000000 rocksdb.db.write.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.compaction.times.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.subcompaction.setup.times.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.table.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.compaction.outfile.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.wal.file.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.manifest.file.sync.micros statistics Percentiles :=> 50 : 399.000000 95 : 399.000000 99 : 399.000000 100 : 399.000000 rocksdb.table.open.io.micros statistics Percentiles :=> 50 : 1623.897059 95 : 2833.102493 99 : 7582.928571 100 : 13973.000000 rocksdb.db.multiget.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.block.compaction.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.block.get.micros statistics Percentiles :=> 50 : 104.072952 95 : 162.995106 99 : 168.827873 100 : 726.000000 rocksdb.write.raw.block.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.l0.slowdown.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.memtable.compaction.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.num.files.stall.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.hard.rate.limit.delay.count 
statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.soft.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.numfiles.in.singlecompaction statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.db.seek.micros statistics Percentiles :=> 50 : 1259.090241 95 : 2094.587629 99 : 2738.917526 100 : 2871.000000 rocksdb.db.write.stall statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.sst.read.micros statistics Percentiles :=> 50 : 131.922207 95 : 559.730739 99 : 771.025619 100 : 13008.000000 rocksdb.num.subcompactions.scheduled statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.per.read statistics Percentiles :=> 50 : 1024.000000 95 : 1024.000000 99 : 1024.000000 100 : 1024.000000 rocksdb.bytes.per.write statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.per.multiget statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.compressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.decompressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.compression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.decompression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.num.merge_operands statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000

** Compaction Stats [default] **

| Level | Files | Size | Score | Read(GB) | Rn(GB) | Rnp1(GB) | Write(GB) | Wnew(GB) | Moved(GB) | W-Amp | Rd(MB/s) | Wr(MB/s) | Comp(sec) | Comp(cnt) | Avg(sec) | KeyIn | KeyDrop |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L1 | 4/0 | 218.11 MB | 0.9 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| L2 | 53/0 | 2.46 GB | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| L3 | 405/0 | 24.96 GB | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| L4 | 1121/0 | 70.17 GB | 0.3 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| Sum | 1583/0 | 97.80 GB | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| Int | 0/0 | 0.00 KB | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |

- Uptime(secs): 1072.3 total, 1072.3 interval
- Flush(GB): cumulative 0.000, interval 0.000
- AddFile(GB): cumulative 0.000, interval 0.000
- AddFile(Total Files): cumulative 0, interval 0
- AddFile(L0 Files): cumulative 0, interval 0
- AddFile(Keys): cumulative 0, interval 0
- Cumulative compaction: 0.00 GB write, 0.00 MB/s write, 0.00 GB read, 0.00 MB/s read, 0.0 seconds
- Interval compaction: 0.00 GB write, 0.00 MB/s write, 0.00 GB read, 0.00 MB/s read, 0.0 seconds
- Stalls(count): 0 level0_slowdown, 0 level0_slowdown_with_compaction, 0 level0_numfiles, 0 level0_numfiles_with_compaction, 0 stop for pending_compaction_bytes, 0 slowdown for pending_compaction_bytes, 0 memtable_compaction, 0 memtable_slowdown, interval 0 total count

** File Read Latency Histogram By Level [default] **

** Level 1 read latency histogram (micros):

Count: 688067 Average: 104.8341 StdDev: 46.99 Min: 68 Median: 102.9612 Max: 13008

Percentiles: P50: 102.96 P75: 132.79 P99: 308.18 P99.9: 561.97 P99.99: 772.10

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 69178 | 10.054% | 10.054% |
| (76, 110] | 346613 | 50.375% | 60.429% |
| (110, 170] | 264000 | 38.368% | 98.797% |
| (170, 250] | 417 | 0.061% | 98.858% |
| (250, 380] | 2186 | 0.318% | 99.176% |
| (380, 580] | 5479 | 0.796% | 99.972% |
| (580, 870] | 189 | 0.027% | 99.999% |
| (870, 1300] | 1 | 0.000% | 99.999% |
| (4400, 6600] | 1 | 0.000% | 100.000% |
| (6600, 9900] | 2 | 0.000% | 100.000% |
| (9900, 14000] | 1 | 0.000% | 100.000% |

** Level 2 read latency histogram (micros): Count: 844987 Average: 135.5396 StdDev: 103.99 Min: 64 Median: 107.4353 Max: 12412

Percentiles: P50: 107.44 P75: 149.06 P99: 546.76 P99.9: 617.24 P99.99: 857.22

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 87782 | 10.389% | 10.389% |
| (76, 110] | 362020 | 42.843% | 53.232% |
| (110, 170] | 282553 | 33.439% | 86.671% |
| (170, 250] | 16003 | 1.894% | 88.564% |
| (250, 380] | 50617 | 5.990% | 94.555% |
| (380, 580] | 45049 | 5.331% | 99.886% |
| (580, 870] | 919 | 0.109% | 99.995% |
| (870, 1300] | 6 | 0.001% | 99.996% |
| (1300, 1900] | 1 | 0.000% | 99.996% |
| (1900, 2900] | 12 | 0.001% | 99.997% |
| (2900, 4400] | 5 | 0.001% | 99.998% |
| (4400, 6600] | 7 | 0.001% | 99.998% |
| (6600, 9900] | 11 | 0.001% | 100.000% |
| (9900, 14000] | 2 | 0.000% | 100.000% |

** Level 3 read latency histogram (micros): Count: 1248302 Average: 243.9094 StdDev: 174.68 Min: 64 Median: 151.1579 Max: 3738

Percentiles: P50: 151.16 P75: 410.40 P99: 766.85 P99.9: 862.71 P99.99: 1812.93

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 67202 | 5.383% | 5.383% |
| (76, 110] | 363627 | 29.130% | 34.513% |
| (110, 170] | 281825 | 22.577% | 57.090% |
| (170, 250] | 4104 | 0.329% | 57.419% |
| (250, 380] | 169689 | 13.594% | 71.012% |
| (380, 580] | 327475 | 26.234% | 97.246% |
| (580, 870] | 33986 | 2.723% | 99.968% |
| (870, 1300] | 117 | 0.009% | 99.978% |
| (1300, 1900] | 178 | 0.014% | 99.992% |
| (1900, 2900] | 93 | 0.007% | 100.000% |
| (2900, 4400] | 6 | 0.000% | 100.000% |

** Level 4 read latency histogram (micros): Count: 1543252 Average: 285.9925 StdDev: 195.82 Min: 65 Median: 169.8734 Max: 3911

Percentiles: P50: 169.87 P75: 469.17 P99: 822.55 P99.9: 869.04 P99.99: 2274.67

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 58795 | 3.810% | 3.810% |
| (76, 110] | 384037 | 24.885% | 28.695% |
| (110, 170] | 329489 | 21.350% | 50.045% |
| (170, 250] | 3077 | 0.199% | 50.244% |
| (250, 380] | 142341 | 9.223% | 59.468% |
| (380, 580] | 537614 | 34.836% | 94.304% |
| (580, 870] | 86644 | 5.614% | 99.919% |
| (870, 1300] | 387 | 0.025% | 99.944% |
| (1300, 1900] | 629 | 0.041% | 99.985% |
| (1900, 2900] | 226 | 0.015% | 99.999% |
| (2900, 4400] | 13 | 0.001% | 100.000% |

** DB Stats **

Uptime(secs): 1072.3 total, 1072.3 interval

Cumulative writes: 0 writes, 0 keys, 0 commit groups, 0.0 writes per commit group, ingest: 0.00 GB, 0.00 MB/s

Cumulative WAL: 0 writes, 0 syncs, 0.00 writes per sync, written: 0.00 GB, 0.00 MB/s

Cumulative stall: 00:00:0.000 H:M:S, 0.0 percent

Interval writes: 0 writes, 0 keys, 0 commit groups, 0.0 writes per commit group, ingest: 0.00 MB, 0.00 MB/s

Interval WAL: 0 writes, 0 syncs, 0.00 writes per sync, written: 0.00 MB, 0.00 MB/s

Interval stall: 00:00:0.000 H:M:S, 0.0 percent

45459589 25 7194151454 9921764 173751 425 20742141 38549 0 4547118 9869938

I/O count: 518413

Range query (open)

Filter DISABLED No Compression open range query throughput: 661.763 rocksdb.db.seek.micros statistics Percentiles :=> 50 : 1509.746157 95 : 2683.052885 99 : 2883.373397 100 : 3721.000000 rocksdb.block.cache.miss COUNT : 4331192 rocksdb.block.cache.hit COUNT : 1480420 rocksdb.block.cache.add COUNT : 4331192 rocksdb.block.cache.add.failures COUNT : 0 rocksdb.block.cache.index.miss COUNT : 1427113 rocksdb.block.cache.index.hit COUNT : 1426244 rocksdb.block.cache.index.add COUNT : 1427113 rocksdb.block.cache.index.bytes.insert COUNT : 607073117800 rocksdb.block.cache.index.bytes.evict COUNT : 606988648528 rocksdb.block.cache.filter.miss COUNT : 0 rocksdb.block.cache.filter.hit COUNT : 0 rocksdb.block.cache.filter.add COUNT : 0 rocksdb.block.cache.filter.bytes.insert COUNT : 0 rocksdb.block.cache.filter.bytes.evict COUNT : 0 rocksdb.block.cache.data.miss COUNT : 2904079 rocksdb.block.cache.data.hit COUNT : 54176 rocksdb.block.cache.data.add COUNT : 2904079 rocksdb.block.cache.data.bytes.insert COUNT : 12145210456 rocksdb.block.cache.bytes.read COUNT : 555255537360 rocksdb.block.cache.bytes.write COUNT : 619218328256 rocksdb.bloom.filter.useful COUNT : 0 rocksdb.persistent.cache.hit COUNT : 0 rocksdb.persistent.cache.miss COUNT : 0 rocksdb.sim.block.cache.hit COUNT : 0 rocksdb.sim.block.cache.miss COUNT : 0 rocksdb.memtable.hit COUNT : 0 rocksdb.memtable.miss COUNT : 1000000 rocksdb.l0.hit COUNT : 0 rocksdb.l1.hit COUNT : 2132 rocksdb.l2andup.hit COUNT : 997868 rocksdb.compaction.key.drop.new COUNT : 0 rocksdb.compaction.key.drop.obsolete COUNT : 0 rocksdb.compaction.key.drop.range_del COUNT : 0 rocksdb.compaction.key.drop.user COUNT : 0 rocksdb.compaction.range_del.drop.obsolete COUNT : 0 rocksdb.compaction.optimized.del.drop.obsolete COUNT : 0 rocksdb.number.keys.written COUNT : 0 rocksdb.number.keys.read COUNT : 1000000 rocksdb.number.keys.updated COUNT : 0 rocksdb.bytes.written COUNT : 0 rocksdb.bytes.read COUNT : 1024000000 rocksdb.number.db.seek COUNT : 50000 
rocksdb.number.db.next COUNT : 0 rocksdb.number.db.prev COUNT : 0 rocksdb.number.db.seek.found COUNT : 50000 rocksdb.number.db.next.found COUNT : 0 rocksdb.number.db.prev.found COUNT : 0 rocksdb.db.iter.bytes.read COUNT : 51600000 rocksdb.no.file.closes COUNT : 0 rocksdb.no.file.opens COUNT : 1583 rocksdb.no.file.errors COUNT : 0 rocksdb.l0.slowdown.micros COUNT : 0 rocksdb.memtable.compaction.micros COUNT : 0 rocksdb.l0.num.files.stall.micros COUNT : 0 rocksdb.stall.micros COUNT : 0 rocksdb.db.mutex.wait.micros COUNT : 0 rocksdb.rate.limit.delay.millis COUNT : 0 rocksdb.num.iterators COUNT : 0 rocksdb.number.multiget.get COUNT : 0 rocksdb.number.multiget.keys.read COUNT : 0 rocksdb.number.multiget.bytes.read COUNT : 0 rocksdb.number.deletes.filtered COUNT : 0 rocksdb.number.merge.failures COUNT : 0 rocksdb.bloom.filter.prefix.checked COUNT : 0 rocksdb.bloom.filter.prefix.useful COUNT : 0 rocksdb.number.reseeks.iteration COUNT : 0 rocksdb.getupdatessince.calls COUNT : 0 rocksdb.block.cachecompressed.miss COUNT : 0 rocksdb.block.cachecompressed.hit COUNT : 0 rocksdb.block.cachecompressed.add COUNT : 0 rocksdb.block.cachecompressed.add.failures COUNT : 0 rocksdb.wal.synced COUNT : 0 rocksdb.wal.bytes COUNT : 0 rocksdb.write.self COUNT : 0 rocksdb.write.other COUNT : 0 rocksdb.write.timeout COUNT : 0 rocksdb.write.wal COUNT : 0 rocksdb.compact.read.bytes COUNT : 0 rocksdb.compact.write.bytes COUNT : 0 rocksdb.flush.write.bytes COUNT : 0 rocksdb.number.direct.load.table.properties COUNT : 0 rocksdb.number.superversion_acquires COUNT : 1 rocksdb.number.superversion_releases COUNT : 0 rocksdb.number.superversion_cleanups COUNT : 0 rocksdb.number.block.compressed COUNT : 0 rocksdb.number.block.decompressed COUNT : 0 rocksdb.number.block.not_compressed COUNT : 0 rocksdb.merge.operation.time.nanos COUNT : 0 rocksdb.filter.operation.time.nanos COUNT : 0 rocksdb.row.cache.hit COUNT : 0 rocksdb.row.cache.miss COUNT : 0 rocksdb.read.amp.estimate.useful.bytes COUNT : 0 
rocksdb.read.amp.total.read.bytes COUNT : 0 rocksdb.number.rate_limiter.drains COUNT : 0 rocksdb.db.get.micros statistics Percentiles :=> 50 : 1076.973931 95 : 1984.094354 99 : 2723.547898 100 : 4497.000000 rocksdb.db.write.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.compaction.times.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.subcompaction.setup.times.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.table.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.compaction.outfile.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.wal.file.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.manifest.file.sync.micros statistics Percentiles :=> 50 : 307.000000 95 : 307.000000 99 : 307.000000 100 : 307.000000 rocksdb.table.open.io.micros statistics Percentiles :=> 50 : 1610.023041 95 : 2806.656347 99 : 7957.400000 100 : 12006.000000 rocksdb.db.multiget.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.block.compaction.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.block.get.micros statistics Percentiles :=> 50 : 104.737752 95 : 163.109112 99 : 168.836146 100 : 2514.000000 rocksdb.write.raw.block.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.l0.slowdown.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.memtable.compaction.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.num.files.stall.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 
rocksdb.hard.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.soft.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.numfiles.in.singlecompaction statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.db.seek.micros statistics Percentiles :=> 50 : 1509.746157 95 : 2683.052885 99 : 2883.373397 100 : 3721.000000 rocksdb.db.write.stall statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.sst.read.micros statistics Percentiles :=> 50 : 132.248805 95 : 678.569015 99 : 833.727059 100 : 10837.000000 rocksdb.num.subcompactions.scheduled statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.per.read statistics Percentiles :=> 50 : 1024.000000 95 : 1024.000000 99 : 1024.000000 100 : 1024.000000 rocksdb.bytes.per.write statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.per.multiget statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.compressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.decompressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.compression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.decompression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.num.merge_operands statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000

** Compaction Stats [default] **

| Level | Files | Size | Score | Read(GB) | Rn(GB) | Rnp1(GB) | Write(GB) | Wnew(GB) | Moved(GB) | W-Amp | Rd(MB/s) | Wr(MB/s) | Comp(sec) | Comp(cnt) | Avg(sec) | KeyIn | KeyDrop |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L1 | 4/0 | 218.11 MB | 0.9 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| L2 | 53/0 | 2.46 GB | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| L3 | 405/0 | 24.96 GB | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| L4 | 1121/0 | 70.17 GB | 0.3 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| Sum | 1583/0 | 97.80 GB | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |
| Int | 0/0 | 0.00 KB | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 | 0 | 0.000 | 0 | 0 |

Uptime(secs): 1194.9 total, 1194.9 interval

Flush(GB): cumulative 0.000, interval 0.000

AddFile(GB): cumulative 0.000, interval 0.000

AddFile(Total Files): cumulative 0, interval 0

AddFile(L0 Files): cumulative 0, interval 0

AddFile(Keys): cumulative 0, interval 0

Cumulative compaction: 0.00 GB write, 0.00 MB/s write, 0.00 GB read, 0.00 MB/s read, 0.0 seconds

Interval compaction: 0.00 GB write, 0.00 MB/s write, 0.00 GB read, 0.00 MB/s read, 0.0 seconds

Stalls(count): 0 level0_slowdown, 0 level0_slowdown_with_compaction, 0 level0_numfiles, 0 level0_numfiles_with_compaction, 0 stop for pending_compaction_bytes, 0 slowdown for pending_compaction_bytes, 0 memtable_compaction, 0 memtable_slowdown, interval 0 total count

** File Read Latency Histogram By Level [default] **

** Level 1 read latency histogram (micros):

Count: 691456 Average: 107.3161 StdDev: 50.52 Min: 68 Median: 103.3895 Max: 9078

Percentiles: P50: 103.39 P75: 133.08 P99: 328.89 P99.9: 686.93 P99.99: 852.65

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 55593 | 8.040% | 8.040% |
| (76, 110] | 360159 | 52.087% | 60.127% |
| (110, 170] | 267361 | 38.666% | 98.793% |
| (170, 250] | 290 | 0.042% | 98.835% |
| (250, 380] | 1876 | 0.271% | 99.107% |
| (380, 580] | 5084 | 0.735% | 99.842% |
| (580, 870] | 1089 | 0.157% | 99.999% |
| (6600, 9900] | 4 | 0.001% | 100.000% |

** Level 2 read latency histogram (micros): Count: 847794 Average: 144.4460 StdDev: 121.74 Min: 65 Median: 107.8328 Max: 10837

Percentiles: P50: 107.83 P75: 149.09 P99: 571.57 P99.9: 827.91 P99.99: 868.06

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 70428 | 8.307% | 8.307% |
| (76, 110] | 377534 | 44.531% | 52.839% |
| (110, 170] | 288363 | 34.013% | 86.852% |
| (170, 250] | 12401 | 1.463% | 88.315% |
| (250, 380] | 24234 | 2.858% | 91.173% |
| (380, 580] | 69276 | 8.171% | 99.344% |
| (580, 870] | 5510 | 0.650% | 99.994% |
| (870, 1300] | 12 | 0.001% | 99.996% |
| (1300, 1900] | 2 | 0.000% | 99.996% |
| (1900, 2900] | 8 | 0.001% | 99.997% |
| (2900, 4400] | 3 | 0.000% | 99.997% |
| (4400, 6600] | 8 | 0.001% | 99.998% |
| (6600, 9900] | 12 | 0.001% | 100.000% |
| (9900, 14000] | 3 | 0.000% | 100.000% |

** Level 3 read latency histogram (micros): Count: 1249974 Average: 276.6674 StdDev: 208.52 Min: 65 Median: 151.4395 Max: 2834

Percentiles: P50: 151.44 P75: 476.95 P99: 836.02 P99.9: 868.16 P99.99: 1783.17

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 52814 | 4.225% | 4.225% |
| (76, 110] | 374972 | 29.998% | 34.224% |
| (110, 170] | 285526 | 22.843% | 57.066% |
| (170, 250] | 1252 | 0.100% | 57.166% |
| (250, 380] | 24983 | 1.999% | 59.165% |
| (380, 580] | 408336 | 32.668% | 91.833% |
| (580, 870] | 101484 | 8.119% | 99.951% |
| (870, 1300] | 329 | 0.026% | 99.978% |
| (1300, 1900] | 190 | 0.015% | 99.993% |
| (1900, 2900] | 88 | 0.007% | 100.000% |

** Level 4 read latency histogram (micros): Count: 1543548 Average: 320.5750 StdDev: 226.17 Min: 66 Median: 169.8488 Max: 3729

Percentiles: P50: 169.85 P75: 517.12 P99: 852.15 P99.9: 1078.44 P99.99: 2191.77

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 46777 | 3.030% | 3.030% |
| (76, 110] | 392592 | 25.434% | 28.465% |
| (110, 170] | 333245 | 21.590% | 50.054% |
| (170, 250] | 2490 | 0.161% | 50.216% |
| (250, 380] | 16428 | 1.064% | 51.280% |
| (380, 580] | 534021 | 34.597% | 85.877% |
| (580, 870] | 215846 | 13.984% | 99.861% |
| (870, 1300] | 1249 | 0.081% | 99.942% |
| (1300, 1900] | 687 | 0.045% | 99.986% |
| (1900, 2900] | 201 | 0.013% | 99.999% |
| (2900, 4400] | 15 | 0.001% | 100.000% |

** DB Stats **

Uptime(secs): 1194.9 total, 1194.9 interval

Cumulative writes: 0 writes, 0 keys, 0 commit groups, 0.0 writes per commit group, ingest: 0.00 GB, 0.00 MB/s

Cumulative WAL: 0 writes, 0 syncs, 0.00 writes per sync, written: 0.00 GB, 0.00 MB/s

Cumulative stall: 00:00:0.000 H:M:S, 0.0 percent

Interval writes: 0 writes, 0 keys, 0 commit groups, 0.0 writes per commit group, ingest: 0.00 MB, 0.00 MB/s

Interval WAL: 0 writes, 0 syncs, 0.00 writes per sync, written: 0.00 MB, 0.00 MB/s

Interval stall: 00:00:0.000 H:M:S, 0.0 percent

36972782 18 5979176801 8177260 161941 385 20642352 38200 0 3791505 8138772

I/O count: 522434
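The flattened counter dumps in these logs are easier to compare across runs once they are parsed. The following is a minimal sketch, assuming the `name COUNT : value` layout shown in the dumps; `parse_tickers` and `hit_ratio` are hypothetical helper names and are not part of RocksDB or its benchmark tools. The sample values are copied from the filter-disabled open range query run.

```python
import re

# Matches "rocksdb.some.ticker COUNT : 123" pairs in a flattened statistics dump.
# \s also matches newlines, so pairs split across lines are still captured.
TICKER_RE = re.compile(r"(rocksdb\.[\w.]+)\s+COUNT\s*:\s*(\d+)")


def parse_tickers(dump):
    """Return a dict mapping ticker name to integer count."""
    return {name: int(value) for name, value in TICKER_RE.findall(dump)}


def hit_ratio(tickers, prefix="rocksdb.block.cache"):
    """Hit ratio for the counters '<prefix>.hit' and '<prefix>.miss'."""
    hit = tickers.get(prefix + ".hit", 0)
    miss = tickers.get(prefix + ".miss", 0)
    total = hit + miss
    return hit / total if total else 0.0


# Counter values taken from the filter-disabled open range query dump.
sample = (
    "rocksdb.block.cache.miss COUNT : 4331192 "
    "rocksdb.block.cache.hit COUNT : 1480420 "
    "rocksdb.block.cache.data.miss COUNT : 2904079 "
    "rocksdb.block.cache.data.hit COUNT : 54176"
)
tickers = parse_tickers(sample)
print(f"overall block cache hit ratio: {hit_ratio(tickers):.3f}")
print(f"data block hit ratio: {hit_ratio(tickers, 'rocksdb.block.cache.data'):.3f}")
```

Running the same helpers over each run's dump gives comparable hit ratios for the filter-disabled and Bloom-filter configurations without reading the raw counters by eye.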

2. Bloom filter

Point query

throughput: 1480.24 rocksdb.db.get.micros statistics Percentiles :=> 50 : 1130.399992 95 : 1863.636429 99 : 2679.271290 100 : 18371.000000 rocksdb.block.cache.miss COUNT : 309039278 rocksdb.block.cache.hit COUNT : 12148 rocksdb.block.cache.add COUNT : 4609065 rocksdb.block.cache.add.failures COUNT : 0 rocksdb.block.cache.index.miss COUNT : 1077069 rocksdb.block.cache.index.hit COUNT : 3415 rocksdb.block.cache.index.add COUNT : 1077069 rocksdb.block.cache.index.bytes.insert COUNT : 466662846600 rocksdb.block.cache.index.bytes.evict COUNT : 466661654432 rocksdb.block.cache.filter.miss COUNT : 2512342 rocksdb.block.cache.filter.hit COUNT : 8733 rocksdb.block.cache.filter.add COUNT : 2512342 rocksdb.block.cache.filter.bytes.insert COUNT : 265798353326 rocksdb.block.cache.filter.bytes.evict COUNT : 265118269703 rocksdb.block.cache.data.miss COUNT : 305449867 rocksdb.block.cache.data.hit COUNT : 0 rocksdb.block.cache.data.add COUNT : 1019654 rocksdb.block.cache.data.bytes.insert COUNT : 4257616096 rocksdb.block.cache.bytes.read COUNT : 2400642649 rocksdb.block.cache.bytes.write COUNT : 736718816022 rocksdb.bloom.filter.useful COUNT : 4598028 rocksdb.persistent.cache.hit COUNT : 0 rocksdb.persistent.cache.miss COUNT : 0 rocksdb.sim.block.cache.hit COUNT : 0 rocksdb.sim.block.cache.miss COUNT : 0 rocksdb.memtable.hit COUNT : 574 rocksdb.memtable.miss COUNT : 1049426 rocksdb.l0.hit COUNT : 1839 rocksdb.l1.hit COUNT : 2358 rocksdb.l2andup.hit COUNT : 995229 rocksdb.compaction.key.drop.new COUNT : 427 rocksdb.compaction.key.drop.obsolete COUNT : 0 rocksdb.compaction.key.drop.range_del COUNT : 0 rocksdb.compaction.key.drop.user COUNT : 0 rocksdb.compaction.range_del.drop.obsolete COUNT : 0 rocksdb.compaction.optimized.del.drop.obsolete COUNT : 0 rocksdb.number.keys.written COUNT : 100000000 rocksdb.number.keys.read COUNT : 1050000 rocksdb.number.keys.updated COUNT : 0 rocksdb.bytes.written COUNT : 104800000000 rocksdb.bytes.read COUNT : 1024000000 rocksdb.number.db.seek COUNT 
: 0 rocksdb.number.db.next COUNT : 0 rocksdb.number.db.prev COUNT : 0 rocksdb.number.db.seek.found COUNT : 0 rocksdb.number.db.next.found COUNT : 0 rocksdb.number.db.prev.found COUNT : 0 rocksdb.db.iter.bytes.read COUNT : 0 rocksdb.no.file.closes COUNT : 0 rocksdb.no.file.opens COUNT : 41456 rocksdb.no.file.errors COUNT : 0 rocksdb.l0.slowdown.micros COUNT : 0 rocksdb.memtable.compaction.micros COUNT : 0 rocksdb.l0.num.files.stall.micros COUNT : 0 rocksdb.stall.micros COUNT : 2248090487 rocksdb.db.mutex.wait.micros COUNT : 0 rocksdb.rate.limit.delay.millis COUNT : 0 rocksdb.num.iterators COUNT : 0 rocksdb.number.multiget.get COUNT : 0 rocksdb.number.multiget.keys.read COUNT : 0 rocksdb.number.multiget.bytes.read COUNT : 0 rocksdb.number.deletes.filtered COUNT : 0 rocksdb.number.merge.failures COUNT : 0 rocksdb.bloom.filter.prefix.checked COUNT : 0 rocksdb.bloom.filter.prefix.useful COUNT : 0 rocksdb.number.reseeks.iteration COUNT : 0 rocksdb.getupdatessince.calls COUNT : 0 rocksdb.block.cachecompressed.miss COUNT : 0 rocksdb.block.cachecompressed.hit COUNT : 0 rocksdb.block.cachecompressed.add COUNT : 0 rocksdb.block.cachecompressed.add.failures COUNT : 0 rocksdb.wal.synced COUNT : 0 rocksdb.wal.bytes COUNT : 104800000000 rocksdb.write.self COUNT : 100000000 rocksdb.write.other COUNT : 0 rocksdb.write.timeout COUNT : 0 rocksdb.write.wal COUNT : 200000000 rocksdb.compact.read.bytes COUNT : 1324925221313 rocksdb.compact.write.bytes COUNT : 1280986265088 rocksdb.flush.write.bytes COUNT : 105192257680 rocksdb.number.direct.load.table.properties COUNT : 0 rocksdb.number.superversion_acquires COUNT : 311 rocksdb.number.superversion_releases COUNT : 308 rocksdb.number.superversion_cleanups COUNT : 307 rocksdb.number.block.compressed COUNT : 0 rocksdb.number.block.decompressed COUNT : 0 rocksdb.number.block.not_compressed COUNT : 0 rocksdb.merge.operation.time.nanos COUNT : 0 rocksdb.filter.operation.time.nanos COUNT : 0 rocksdb.row.cache.hit COUNT : 0 
rocksdb.row.cache.miss COUNT : 0 rocksdb.read.amp.estimate.useful.bytes COUNT : 0 rocksdb.read.amp.total.read.bytes COUNT : 0 rocksdb.number.rate_limiter.drains COUNT : 0 rocksdb.db.get.micros statistics Percentiles :=> 50 : 1130.399992 95 : 1863.636429 99 : 2679.271290 100 : 18371.000000 rocksdb.db.write.micros statistics Percentiles :=> 50 : 4.780012 95 : 19.777859 99 : 1098.236474 100 : 14062.000000 rocksdb.compaction.times.micros statistics Percentiles :=> 50 : 843368.091762 95 : 1982105.263158 99 : 6767882.352941 100 : 7806996.000000 rocksdb.subcompaction.setup.times.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.table.sync.micros statistics Percentiles :=> 50 : 453.993808 95 : 1087.986111 99 : 2088.888889 100 : 4219.000000 rocksdb.compaction.outfile.sync.micros statistics Percentiles :=> 50 : 326.390808 95 : 783.074169 99 : 1698.693878 100 : 15456.000000 rocksdb.wal.file.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.manifest.file.sync.micros statistics Percentiles :=> 50 : 294.308814 95 : 532.961373 99 : 778.957576 100 : 20747.000000 rocksdb.table.open.io.micros statistics Percentiles :=> 50 : 1013.691163 95 : 1809.195302 99 : 2707.411301 100 : 14963.000000 rocksdb.db.multiget.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.block.compaction.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.block.get.micros statistics Percentiles :=> 50 : 103.683874 95 : 164.490373 99 : 210.974179 100 : 4125.000000 rocksdb.write.raw.block.micros statistics Percentiles :=> 50 : 0.608095 95 : 1.997809 99 : 3.746680 100 : 84636.000000 rocksdb.l0.slowdown.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.memtable.compaction.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 
rocksdb.num.files.stall.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.hard.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.soft.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.numfiles.in.singlecompaction statistics Percentiles :=> 50 : 1.000000 95 : 1.178319 99 : 17.180500 100 : 26.000000 rocksdb.db.seek.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.db.write.stall statistics Percentiles :=> 50 : 0.529673 95 : 915.755958 99 : 1223.453079 100 : 13991.000000 rocksdb.sst.read.micros statistics Percentiles :=> 50 : 217.993679 95 : 527.355345 99 : 731.775890 100 : 15213.000000 rocksdb.num.subcompactions.scheduled statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.per.read statistics Percentiles :=> 50 : 1024.000000 95 : 1024.000000 99 : 1024.000000 100 : 1024.000000 rocksdb.bytes.per.write statistics Percentiles :=> 50 : 1048.000000 95 : 1048.000000 99 : 1048.000000 100 : 1048.000000 rocksdb.bytes.per.multiget statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.compressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.bytes.decompressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.compression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.decompression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000 rocksdb.read.num.merge_operands statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000

** Compaction Stats [default] **

| Level | Files | Size | Score | Read(GB) | Rn(GB) | Rnp1(GB) | Write(GB) | Wnew(GB) | Moved(GB) | W-Amp | Rd(MB/s) | Wr(MB/s) | Comp(sec) | Comp(cnt) | Avg(sec) | KeyIn | KeyDrop |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L0 | 3/0 | 184.63 MB | 0.8 | 0.0 | 0.0 | 0.0 | 98.0 | 98.0 | 0.0 | 1.0 | 0.0 | 461.0 | 218 | 1630 | 0.133 | 0 | 0 |
| L1 | 5/0 | 240.20 MB | 0.9 | 193.5 | 97.8 | 95.7 | 193.5 | 97.8 | 0.0 | 2.0 | 406.3 | 406.3 | 488 | 111 | 4.393 | 197M | 19 |
| L2 | 53/0 | 2.47 GB | 1.0 | 536.8 | 97.0 | 439.8 | 536.8 | 97.0 | 0.5 | 5.5 | 380.5 | 380.5 | 1444 | 1521 | 0.950 | 547M | 110 |
| L3 | 404/0 | 24.95 GB | 1.0 | 406.9 | 77.3 | 329.6 | 406.9 | 77.3 | 17.7 | 5.3 | 393.4 | 393.4 | 1059 | 1165 | 0.909 | 415M | 256 |
| L4 | 1115/0 | 70.07 GB | 0.3 | 55.8 | 21.9 | 33.9 | 55.8 | 21.9 | 48.1 | 2.5 | 372.7 | 372.6 | 153 | 327 | 0.469 | 56M | 42 |
| Sum | 1580/0 | 97.91 GB | 0.0 | 1193.1 | 294.1 | 899.0 | 1291.0 | 392.0 | 66.4 | 13.2 | 363.3 | 393.2 | 3362 | 4754 | 0.707 | 1217M | 427 |
| Int | 0/0 | 0.00 KB | 0.0 | 58.5 | 13.6 | 44.9 | 59.7 | 14.8 | 12.7 | 49.7 | 382.5 | 390.4 | 157 | 215 | 0.728 | 59M | 33 |

Uptime(secs): 4303.7 total, 1289.5 interval

Flush(GB): cumulative 97.967, interval 1.202

AddFile(GB): cumulative 0.000, interval 0.000

AddFile(Total Files): cumulative 0, interval 0

AddFile(L0 Files): cumulative 0, interval 0

AddFile(Keys): cumulative 0, interval 0

Cumulative compaction: 1290.96 GB write, 307.17 MB/s write, 1193.05 GB read, 283.87 MB/s read, 3362.4 seconds

Interval compaction: 59.69 GB write, 47.40 MB/s write, 58.49 GB read, 46.45 MB/s read, 156.6 seconds

Stalls(count): 1398 level0_slowdown, 96 level0_slowdown_with_compaction, 0 level0_numfiles, 0 level0_numfiles_with_compaction, 0 stop for pending_compaction_bytes, 3246 slowdown for pending_compaction_bytes, 0 memtable_compaction, 0 memtable_slowdown, interval 93 total count

** File Read Latency Histogram By Level [default] **

** Level 0 read latency histogram (micros):

Count: 20214 Average: 170.2285 StdDev: 165.18 Min: 68 Median: 118.1592 Max: 6594

Percentiles: P50: 118.16 P75: 161.43 P99: 820.52 P99.9: 1285.14 P99.99: 2895.72

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (51, 76] | 1781 | 8.811% | 8.811% |
| (76, 110] | 7373 | 36.475% | 45.285% |
| (110, 170] | 7008 | 34.669% | 79.954% |
| (170, 250] | 530 | 2.622% | 82.576% |
| (250, 380] | 1225 | 6.060% | 88.637% |
| (380, 580] | 1647 | 8.148% | 96.784% |
| (580, 870] | 540 | 2.671% | 99.456% |
| (870, 1300] | 93 | 0.460% | 99.916% |
| (1300, 1900] | 10 | 0.049% | 99.965% |
| (1900, 2900] | 5 | 0.025% | 99.990% |
| (2900, 4400] | 1 | 0.005% | 99.995% |
| (4400, 6600] | 1 | 0.005% | 100.000% |

** Level 1 read latency histogram (micros): Count: 470338 Average: 235.5529 StdDev: 67.23 Min: 19 Median: 224.8040 Max: 15213

Percentiles: P50: 224.80 P75: 263.91 P99: 475.54 P99.9: 897.28 P99.99: 1961.44

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (15, 22] | 5 | 0.001% | 0.001% |
| (22, 34] | 31 | 0.007% | 0.008% |
| (34, 51] | 77 | 0.016% | 0.024% |
| (51, 76] | 644 | 0.137% | 0.161% |
| (76, 110] | 2488 | 0.529% | 0.690% |
| (110, 170] | 4876 | 1.037% | 1.727% |
| (170, 250] | 331433 | 70.467% | 72.194% |
| (250, 380] | 123339 | 26.223% | 98.417% |
| (380, 580] | 5739 | 1.220% | 99.637% |
| (580, 870] | 1212 | 0.258% | 99.895% |
| (870, 1300] | 373 | 0.079% | 99.974% |
| (1300, 1900] | 72 | 0.015% | 99.990% |
| (1900, 2900] | 32 | 0.007% | 99.996% |
| (2900, 4400] | 14 | 0.003% | 99.999% |
| (4400, 6600] | 2 | 0.000% | 100.000% |
| (14000, 22000] | 1 | 0.000% | 100.000% |

** Level 2 read latency histogram (micros): Count: 720652 Average: 209.2450 StdDev: 99.55 Min: 19 Median: 201.5082 Max: 13293

Percentiles: P50: 201.51 P75: 240.51 P99: 530.72 P99.9: 1023.88 P99.99: 3465.58

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (15, 22] | 26 | 0.004% | 0.004% |
| (22, 34] | 128 | 0.018% | 0.021% |
| (34, 51] | 294 | 0.041% | 0.062% |
| (51, 76] | 3992 | 0.554% | 0.616% |
| (76, 110] | 26437 | 3.668% | 4.285% |
| (110, 170] | 183899 | 25.518% | 29.803% |
| (170, 250] | 369555 | 51.281% | 81.084% |
| (250, 380] | 115838 | 16.074% | 97.158% |
| (380, 580] | 17617 | 2.445% | 99.602% |
| (580, 870] | 1936 | 0.269% | 99.871% |
| (870, 1300] | 585 | 0.081% | 99.952% |
| (1300, 1900] | 128 | 0.018% | 99.970% |
| (1900, 2900] | 111 | 0.015% | 99.985% |
| (2900, 4400] | 90 | 0.012% | 99.998% |
| (4400, 6600] | 6 | 0.001% | 99.999% |
| (6600, 9900] | 3 | 0.000% | 99.999% |
| (9900, 14000] | 7 | 0.001% | 100.000% |

** Level 3 read latency histogram (micros): Count: 2154501 Average: 247.6514 StdDev: 146.71 Min: 19 Median: 221.3222 Max: 13938

Percentiles: P50: 221.32 P75: 329.57 P99: 773.13 P99.9: 1181.63 P99.99: 2805.79

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (15, 22] | 29 | 0.001% | 0.001% |
| (22, 34] | 47 | 0.002% | 0.004% |
| (34, 51] | 24 | 0.001% | 0.005% |
| (51, 76] | 50131 | 2.327% | 2.331% |
| (76, 110] | 298212 | 13.841% | 16.173% |
| (110, 170] | 288623 | 13.396% | 29.569% |
| (170, 250] | 686150 | 31.847% | 61.416% |
| (250, 380] | 478117 | 22.192% | 83.608% |
| (380, 580] | 298181 | 13.840% | 97.448% |
| (580, 870] | 50217 | 2.331% | 99.779% |
| (870, 1300] | 3609 | 0.168% | 99.946% |
| (1300, 1900] | 672 | 0.031% | 99.977% |
| (1900, 2900] | 302 | 0.014% | 99.991% |
| (2900, 4400] | 166 | 0.008% | 99.999% |
| (4400, 6600] | 9 | 0.000% | 99.999% |
| (6600, 9900] | 4 | 0.000% | 100.000% |
| (9900, 14000] | 8 | 0.000% | 100.000% |

** Level 4 read latency histogram (micros): Count: 1219261 Average: 251.7676 StdDev: 152.73 Min: 19 Median: 225.0891 Max: 12944

Percentiles: P50: 225.09 P75: 352.03 P99: 752.40 P99.9: 1193.57 P99.99: 2872.93

| Latency bucket (micros) | Count | Percent | Cumulative |
|---|---|---|---|
| (15, 22] | 3 | 0.000% | 0.000% |
| (22, 34] | 4 | 0.000% | 0.001% |
| (34, 51] | 3 | 0.000% | 0.001% |
| (51, 76] | 35062 | 2.876% | 2.876% |
| (76, 110] | 210607 | 17.273% | 20.150% |
| (110, 170] | 163921 | 13.444% | 33.594% |
| (170, 250] | 290483 | 23.825% | 57.419% |
| (250, 380] | 273138 | 22.402% | 79.821% |
| (380, 580] | 219972 | 18.041% | 97.862% |
| (580, 870] | 23340 | 1.914% | 99.776% |
| (870, 1300] | 2005 | 0.164% | 99.941% |
| (1300, 1900] | 424 | 0.035% | 99.975% |
| (1900, 2900] | 182 | 0.015% | 99.990% |
| (2900, 4400] | 109 | 0.009% | 99.999% |
| (4400, 6600] | 6 | 0.000% | 100.000% |
| (9900, 14000] | 2 | 0.000% | 100.000% |

** DB Stats **

Uptime(secs): 4303.7 total, 1289.5 interval

Cumulative writes: 100M writes, 100M keys, 100M commit groups, 1.0 writes per commit group, ingest: 97.60 GB, 23.22 MB/s

Cumulative WAL: 100M writes, 0 syncs, 100000000.00 writes per sync, written: 97.60 GB, 23.22 MB/s

Cumulative stall: 00:37:28.090 H:M:S, 52.2 percent

Interval writes: 1229K writes, 1229K keys, 1229K commit groups, 1.0 writes per commit group, ingest: 1228.57 MB, 0.95 MB/s

Interval WAL: 1229K writes, 0 syncs, 1229247.00 writes per sync, written: 1.20 MB, 0.95 MB/s

Interval stall: 00:00:43.933 H:M:S, 3.4 percent

10358005520 6002 196186905168 2254500231 1025791168 229369 127443302253 2592493142 0 198095258 648289708

I/O count: 126149

Range query (closed)

Using rocksdb.BuiltinBloomFilter No Compression closed range query throughput: 432.434 rocksdb.db.seek.micros statistics Percentiles :=> 50 : 2385.102631 95 : 2893.738132 99 : 4062.618084 100 : 4093.000000 rocksdb.block.cache.miss COUNT : 4954786 rocksdb.block.cache.hit COUNT : 1594163 rocksdb.block.cache.add COUNT : 3660610 rocksdb.block.cache.add.failures COUNT : 0 rocksdb.block.cache.index.miss COUNT : 1164052 rocksdb.block.cache.index.hit COUNT : 43224 rocksdb.block.cache.index.add COUNT : 1164052 rocksdb.block.cache.index.bytes.insert COUNT : 500267958336 rocksdb.block.cache.index.bytes.evict COUNT : 500267958336 rocksdb.block.cache.filter.miss COUNT : 1290604 rocksdb.block.cache.filter.hit COUNT : 1546639 rocksdb.block.cache.filter.add COUNT : 1290604 rocksdb.block.cache.filter.bytes.insert COUNT : 142640936220 rocksdb.block.cache.filter.bytes.evict COUNT : 142463171932 rocksdb.block.cache.data.miss COUNT : 2500130 rocksdb.block.cache.data.hit COUNT : 4300 rocksdb.block.cache.data.add COUNT : 1205954 rocksdb.block.cache.data.bytes.insert COUNT : 5038838992 rocksdb.block.cache.bytes.read COUNT : 179148852747 rocksdb.block.cache.bytes.write COUNT : 647947733548 rocksdb.bloom.filter.useful COUNT : 1630105 rocksdb.persistent.cache.hit COUNT : 0 rocksdb.persistent.cache.miss COUNT : 0 rocksdb.sim.block.cache.hit COUNT : 0 rocksdb.sim.block.cache.miss COUNT : 0 rocksdb.memtable.hit COUNT : 0 rocksdb.memtable.miss COUNT : 1000000 rocksdb.l0.hit COUNT : 0 rocksdb.l1.hit COUNT : 2200 rocksdb.l2andup.hit COUNT : 997800 rocksdb.compaction.key.drop.new COUNT : 0 rocksdb.compaction.key.drop.obsolete COUNT : 0 rocksdb.compaction.key.drop.range_del COUNT : 0 rocksdb.compaction.key.drop.user COUNT : 0 rocksdb.compaction.range_del.drop.obsolete COUNT : 0 rocksdb.compaction.optimized.del.drop.obsolete COUNT : 0 rocksdb.number.keys.written COUNT : 0 rocksdb.number.keys.read COUNT : 1000000 rocksdb.number.keys.updated COUNT : 0 rocksdb.bytes.written COUNT : 0 rocksdb.bytes.read 
COUNT : 1024000000 rocksdb.number.db.seek COUNT : 50000 rocksdb.number.db.next COUNT : 0 rocksdb.number.db.prev COUNT : 0 rocksdb.number.db.seek.found COUNT : 24956 rocksdb.number.db.next.found COUNT : 0 rocksdb.number.db.prev.found COUNT : 0 rocksdb.db.iter.bytes.read COUNT : 25754592 rocksdb.no.file.closes COUNT : 0 rocksdb.no.file.opens COUNT : 1760 rocksdb.no.file.errors COUNT : 0 rocksdb.l0.slowdown.micros COUNT : 0 rocksdb.memtable.compaction.micros COUNT : 0 rocksdb.l0.num.files.stall.micros COUNT : 0 rocksdb.stall.micros COUNT : 0 rocksdb.db.mutex.wait.micros COUNT : 0 rocksdb.rate.limit.delay.millis COUNT : 0 rocksdb.num.iterators COUNT : 0 rocksdb.number.multiget.get COUNT : 0 rocksdb.number.multiget.keys.read COUNT : 0 rocksdb.number.multiget.bytes.read COUNT : 0 rocksdb.number.deletes.filtered COUNT : 0 rocksdb.number.merge.failures COUNT : 0 rocksdb.bloom.filter.prefix.checked COUNT : 0 rocksdb.bloom.filter.prefix.useful COUNT : 0 rocksdb.number.reseeks.iteration COUNT : 0 rocksdb.getupdatessince.calls COUNT : 0 rocksdb.block.cachecompressed.miss COUNT : 0 rocksdb.block.cachecompressed.hit COUNT : 0 rocksdb.block.cachecompressed.add COUNT : 0 rocksdb.block.cachecompressed.add.failures COUNT : 0 rocksdb.wal.synced COUNT : 0 rocksdb.wal.bytes COUNT : 0 rocksdb.write.self COUNT : 0 rocksdb.write.other COUNT : 0 rocksdb.write.timeout COUNT : 0 rocksdb.write.wal COUNT : 0 rocksdb.compact.read.bytes COUNT : 5581154591 rocksdb.compact.write.bytes COUNT : 5506159800 rocksdb.flush.write.bytes COUNT : 0 rocksdb.number.direct.load.table.properties COUNT : 0 rocksdb.number.superversion_acquires COUNT : 3 rocksdb.number.superversion_releases COUNT : 2 rocksdb.number.superversion_cleanups COUNT : 2 rocksdb.number.block.compressed COUNT : 0 rocksdb.number.block.decompressed COUNT : 0 rocksdb.number.block.not_compressed COUNT : 0 rocksdb.merge.operation.time.nanos COUNT : 0 rocksdb.filter.operation.time.nanos COUNT : 0 rocksdb.row.cache.hit COUNT : 0 
rocksdb.row.cache.miss COUNT : 0
rocksdb.read.amp.estimate.useful.bytes COUNT : 0
rocksdb.read.amp.total.read.bytes COUNT : 0
rocksdb.number.rate_limiter.drains COUNT : 0
rocksdb.db.get.micros statistics Percentiles :=> 50 : 997.889590 95 : 1664.635517 99 : 1865.960909 100 : 13936.000000
rocksdb.db.write.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.compaction.times.micros statistics Percentiles :=> 50 : 945000.000000 95 : 1526046.000000 99 : 1526046.000000 100 : 1526046.000000
rocksdb.subcompaction.setup.times.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.table.sync.micros statistics Percentiles :=> 50 : 437.000000 95 : 437.000000 99 : 437.000000 100 : 437.000000
rocksdb.compaction.outfile.sync.micros statistics Percentiles :=> 50 : 324.848485 95 : 558.461538 99 : 1915.000000 100 : 1915.000000
rocksdb.wal.file.sync.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.manifest.file.sync.micros statistics Percentiles :=> 50 : 322.222222 95 : 2200.000000 99 : 2543.000000 100 : 2543.000000
rocksdb.table.open.io.micros statistics Percentiles :=> 50 : 1516.746411 95 : 2726.923077 99 : 7463.076923 100 : 17829.000000
rocksdb.db.multiget.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.read.block.compaction.micros statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.read.block.get.micros statistics Percentiles :=> 50 : 104.247163 95 : 163.236767 99 : 169.095890 100 : 2452.000000
rocksdb.write.raw.block.micros statistics Percentiles :=> 50 : 0.570880 95 : 1.770671 99 : 2.849481 100 : 4033.000000
rocksdb.l0.slowdown.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.memtable.compaction.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.num.files.stall.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.hard.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.soft.rate.limit.delay.count statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.numfiles.in.singlecompaction statistics Percentiles :=> 50 : 1.000000 95 : 3.350000 99 : 3.870000 100 : 4.000000
rocksdb.db.seek.micros statistics Percentiles :=> 50 : 2385.102631 95 : 2893.738132 99 : 4062.618084 100 : 4093.000000
rocksdb.db.write.stall statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.sst.read.micros statistics Percentiles :=> 50 : 229.246258 95 : 563.726406 99 : 783.919701 100 : 12757.000000
rocksdb.num.subcompactions.scheduled statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.bytes.per.read statistics Percentiles :=> 50 : 1024.000000 95 : 1024.000000 99 : 1024.000000 100 : 1024.000000
rocksdb.bytes.per.write statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.bytes.per.multiget statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.bytes.compressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.bytes.decompressed statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.compression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.decompression.times.nanos statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000
rocksdb.read.num.merge_operands statistics Percentiles :=> 50 : 0.000000 95 : 0.000000 99 : 0.000000 100 : 0.000000

** Compaction Stats [default] **

| Level | Files | Size | Score | Read(GB) | Rn(GB) | Rnp1(GB) | Write(GB) | Wnew(GB) | Moved(GB) | W-Amp | Rd(MB/s) | Wr(MB/s) | Comp(sec) | Comp(cnt) | Avg(sec) | KeyIn | KeyDrop |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L0 | 0/0 | 0.00 KB | 0.0 | 0.0 | 0.0 | 0.0 | 0.1 | 0.1 | 0.0 | 1.0 | 0.0 | 427.2 | 0 | 1 | 0.135 | 0 | 0 |
| L1 | 4/0 | 224.39 MB | 0.9 | 0.5 | 0.2 | 0.2 | 0.5 | 0.2 | 0.0 | 2.0 | 385.6 | 385.6 | 1 | 1 | 1.251 | 480K | 0 |
| L2 | 51/0 | 2.47 GB | 1.0 | 1.4 | 0.3 | 1.2 | 1.4 | 0.3 | 0.0 | 5.7 | 413.1 | 413.1 | 4 | 4 | 0.892 | 1469K | 0 |
| L3 | 403/0 | 24.95 GB | 1.0 | 1.9 | 0.3 | 1.6 | 1.9 | 0.3 | 0.0 | 7.5 | 417.4 | 417.4 | 5 | 4 | 1.165 | 1939K | 0 |
| L4 | 1120/0 | 70.33 GB | 0.3 | 1.3 | 0.3 | 1.0 | 1.3 | 0.3 | 0.0 | 5.0 | 389.5 | 389.5 | 3 | 4 | 0.829 | 1286K | 0 |
| Sum | 1578/0 | 97.97 GB | 0.0 | 5.1 | 1.0 | 4.1 | 5.1 | 1.0 | 0.0 | 91.1 | 401.6 | 406.1 | 13 | 14 | 0.924 | 5176K | 0 |
| Int | 0/0 | 0.00 KB | 0.0 | 5.1 | 1.0 | 4.1 | 5.1 | 1.0 | 0.0 | 5445635472.0 | 405.9 | 405.8 | 13 | 13 | 0.984 | 5176K | 0 |

Uptime(secs): 1110.0 total, 1109.3 interval
Flush(GB): cumulative 0.056, interval 0.000
AddFile(GB): cumulative 0.000, interval 0.000
AddFile(Total Files): cumulative 0, interval 0
AddFile(L0 Files): cumulative 0, interval 0
AddFile(Keys): cumulative 0, interval 0
Cumulative compaction: 5.13 GB write, 4.73 MB/s write, 5.07 GB read, 4.68 MB/s read, 12.9 seconds
Interval compaction: 5.07 GB write, 4.68 MB/s write, 5.07 GB read, 4.68 MB/s read, 12.8 seconds
Stalls(count): 0 level0_slowdown, 0 level0_slowdown_with_compaction, 0 level0_numfiles, 0 level0_numfiles_with_compaction, 0 stop for pending_compaction_bytes, 0 slowdown for pending_compaction_bytes, 0 memtable_compaction, 0 memtable_slowdown, interval 0 total count

** File Read Latency Histogram By Level [default] **

** Level 0 read latency histogram (micros):
Count: 12 Average: 4120.9167 StdDev: 3690.48
Min: 154 Median: 1300.0000 Max: 8650

Percentiles: P50: 1300.00 P75: 8250.00 P99: 8650.00 P99.9: 8650.00 P99.99: 8650.00

( 110, 170 ] 1 8.333% 8.333% ##
( 170, 250 ] 1 8.333% 16.667% ##
( 250, 380 ] 2 16.667% 33.333% ###
( 380, 580 ] 1 8.333% 41.667% ##
( 870, 1300 ] 1 8.333% 50.000% ##
( 6600, 9900 ] 6 50.000% 100.000% ##########

** Level 1 read latency histogram (micros):
Count: 112908 Average: 236.9306 StdDev: 155.66
Min: 68 Median: 208.1038 Max: 9588

Percentiles: P50: 208.10 P75: 376.28 P99: 572.15 P99.9: 579.56 P99.99: 848.31

( 51, 76 ] 8232 7.291% 7.291% #
( 76, 110 ] 33032 29.256% 36.547% ######
( 110, 170 ] 11995 10.624% 47.170% ##
( 170, 250 ] 6708 5.941% 53.111% #
( 250, 380 ] 25442 22.533% 75.645% #####
( 380, 580 ] 27447 24.309% 99.954% #####
( 580, 870 ] 44 0.039% 99.993%
( 870, 1300 ] 2 0.002% 99.995%
( 2900, 4400 ] 1 0.001% 99.996%
( 6600, 9900 ] 5 0.004% 100.000%

** Level 2 read latency histogram (micros):
Count: 234452 Average: 213.1414 StdDev: 131.10
Min: 32 Median: 188.8882 Max: 9979

Percentiles: P50: 188.89 P75: 286.41 P99: 563.02 P99.9: 680.76 P99.99: 2756.16

( 22, 34 ] 2 0.001% 0.001%
( 34, 51 ] 1 0.000% 0.001%
( 51, 76 ] 5663 2.415% 2.417%
( 76, 110 ] 44335 18.910% 21.327% ####
( 110, 170 ] 53264 22.719% 44.045% #####
( 170, 250 ] 59131 25.221% 69.266% #####
( 250, 380 ] 48001 20.474% 89.740% ####
( 380, 580 ] 23725 10.119% 99.859% ##
( 580, 870 ] 275 0.117% 99.977%
( 870, 1300 ] 9 0.004% 99.980%
( 1300, 1900 ] 8 0.003% 99.984%
( 1900, 2900 ] 17 0.007% 99.991%
( 2900, 4400 ] 3 0.001% 99.992%
( 4400, 6600 ] 7 0.003% 99.995%
( 6600, 9900 ] 10 0.004% 100.000%
( 9900, 14000 ] 1 0.000% 100.000%

** Level 3 read latency histogram (micros):
Count: 1070906 Average: 250.1457 StdDev: 134.43
Min: 20 Median: 223.8383 Max: 3687

Percentiles: P50: 223.84 P75: 340.89 P99: 663.22 P99.9: 858.19 P99.99: 1723.21

( 15, 22 ] 1 0.000% 0.000%
( 22, 34 ] 1 0.000% 0.000%
( 34, 51 ] 1 0.000% 0.000%
( 51, 76 ] 25441 2.376% 2.376%
( 76, 110 ] 155441 14.515% 16.891% ###
( 110, 170 ] 127775 11.931% 28.822% ##
( 170, 250 ] 336999 31.469% 60.291% ######
( 250, 380 ] 225296 21.038% 81.329% ####
( 380, 580 ] 185128 17.287% 98.616% ###
( 580, 870 ] 14336 1.339% 99.955%
( 870, 1300 ] 260 0.024% 99.979%
( 1300, 1900 ] 170 0.016% 99.995%
( 1900, 2900 ] 54 0.005% 100.000%
( 2900, 4400 ] 4 0.000% 100.000%

** Level 4 read latency histogram (micros):
Count: 2243851 Average: 279.1160 StdDev: 170.54
Min: 20 Median: 240.0615 Max: 12757

Percentiles: P50: 240.06 P75: 417.01 P99: 810.47 P99.9: 868.29 P99.99: 1983.11

( 15, 22 ] 1 0.000% 0.000%
( 22, 34 ] 1 0.000% 0.000%
( 51, 76 ] 58056 2.587% 2.587% #
( 76, 110 ] 401366 17.887% 20.475% ####
( 110, 170 ] 310234 13.826% 34.301% ###
( 170, 250 ] 402238 17.926% 52.227% ####
( 250, 380 ] 406980 18.138% 70.365% ####
( 380, 580 ] 562042 25.048% 95.413% #####
( 580, 870 ] 101285 4.514% 99.927% #
( 870, 1300 ] 761 0.034% 99.960%
( 1300, 1900 ] 643 0.029% 99.989%
( 1900, 2900 ] 236 0.011% 100.000%
( 2900, 4400 ] 8 0.000% 100.000%
( 9900, 14000 ] 1 0.000% 100.000%

** DB Stats **
Uptime(secs): 1110.0 total, 1109.3 interval
Cumulative writes: 0 writes, 0 keys, 0 commit groups, 0.0 writes per commit group, ingest: 0.00 GB, 0.00 MB/s
Cumulative WAL: 0 writes, 0 syncs, 0.00 writes per sync, written: 0.00 GB, 0.00 MB/s
Cumulative stall: 00:00:0.000 H:M:S, 0.0 percent
Interval writes: 0 writes, 0 keys, 0 commit groups, 0.0 writes per commit group, ingest: 0.00 MB, 0.00 MB/s
Interval WAL: 0 writes, 0 syncs, 0.00 writes per sync, written: 0.00 MB, 0.00 MB/s
Interval stall: 00:00:0.000 H:M:S, 0.0 percent

21385892 18 3495504910 4739817 146816 330 20514505 37758 0 2227254 4736410

I/O count: 940629

Range query (open)